When re-retrieving hook tasks from the DB double check if they have not

been delivered in the meantime. Further ensure that tasks are marked as

delivered when they are being delivered.

In addition:

* Improve the error reporting and make sure that the webhook task

population script runs in a separate goroutine.

* Only get hook task IDs out of the DB instead of the whole task when

repopulating the queue

* When repopulating the queue make the DB request paged

Ref #17940

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Unfortunately #21549 changed the name of Testcases without changing

their associated fixture directories.

Fix#21854

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

This PR adds a context parameter to a bunch of methods. Some helper

`xxxCtx()` methods got replaced with the normal name now.

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

- It's possible that the `user_redirect` table contains a user id that

no longer exists.

- Delete a user redirect upon deleting the user.

- Add a check for these dangling user redirects to check-db-consistency.

The doctor check `storages` currently only checks the attachment

storage. This PR adds some basic garbage collection functionality for

the other types of storage.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Fix#19513

This PR introduce a new db method `InTransaction(context.Context)`,

and also builtin check on `db.TxContext` and `db.WithTx`.

There is also a new method `db.AutoTx` has been introduced but could be used by other PRs.

`WithTx` will always open a new transaction, if a transaction exist in context, return an error.

`AutoTx` will try to open a new transaction if no transaction exist in context.

That means it will always enter a transaction if there is no error.

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: 6543 <6543@obermui.de>

Related #20471

This PR adds global quota limits for the package registry. Settings for

individual users/orgs can be added in a seperate PR using the settings

table.

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Close https://github.com/go-gitea/gitea/issues/21640

Before: Gitea can create users like ".xxx" or "x..y", which is not

ideal, it's already a consensus that dot filenames have special

meanings, and `a..b` is a confusing name when doing cross repo compare.

After: stricter

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: delvh <dev.lh@web.de>

_This is a different approach to #20267, I took the liberty of adapting

some parts, see below_

## Context

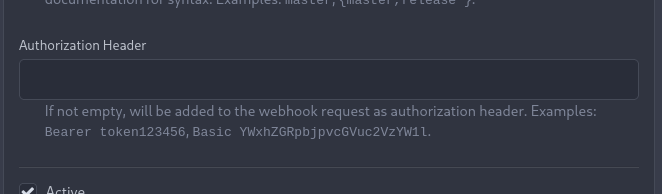

In some cases, a weebhook endpoint requires some kind of authentication.

The usual way is by sending a static `Authorization` header, with a

given token. For instance:

- Matrix expects a `Bearer <token>` (already implemented, by storing the

header cleartext in the metadata - which is buggy on retry #19872)

- TeamCity #18667

- Gitea instances #20267

- SourceHut https://man.sr.ht/graphql.md#authentication-strategies (this

is my actual personal need :)

## Proposed solution

Add a dedicated encrypt column to the webhook table (instead of storing

it as meta as proposed in #20267), so that it gets available for all

present and future hook types (especially the custom ones #19307).

This would also solve the buggy matrix retry #19872.

As a first step, I would recommend focusing on the backend logic and

improve the frontend at a later stage. For now the UI is a simple

`Authorization` field (which could be later customized with `Bearer` and

`Basic` switches):

The header name is hard-coded, since I couldn't fine any usecase

justifying otherwise.

## Questions

- What do you think of this approach? @justusbunsi @Gusted @silverwind

- ~~How are the migrations generated? Do I have to manually create a new

file, or is there a command for that?~~

- ~~I started adding it to the API: should I complete it or should I

drop it? (I don't know how much the API is actually used)~~

## Done as well:

- add a migration for the existing matrix webhooks and remove the

`Authorization` logic there

_Closes #19872_

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: Gusted <williamzijl7@hotmail.com>

Co-authored-by: delvh <dev.lh@web.de>

I found myself wondering whether a PR I scheduled for automerge was

actually merged. It was, but I didn't receive a mail notification for it

- that makes sense considering I am the doer and usually don't want to

receive such notifications. But ideally I want to receive a notification

when a PR was merged because I scheduled it for automerge.

This PR implements exactly that.

The implementation works, but I wonder if there's a way to avoid passing

the "This PR was automerged" state down so much. I tried solving this

via the database (checking if there's an automerge scheduled for this PR

when sending the notification) but that did not work reliably, probably

because sending the notification happens async and the entry might have

already been deleted. My implementation might be the most

straightforward but maybe not the most elegant.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The OAuth spec [defines two types of

client](https://datatracker.ietf.org/doc/html/rfc6749#section-2.1),

confidential and public. Previously Gitea assumed all clients to be

confidential.

> OAuth defines two client types, based on their ability to authenticate

securely with the authorization server (i.e., ability to

> maintain the confidentiality of their client credentials):

>

> confidential

> Clients capable of maintaining the confidentiality of their

credentials (e.g., client implemented on a secure server with

> restricted access to the client credentials), or capable of secure

client authentication using other means.

>

> **public

> Clients incapable of maintaining the confidentiality of their

credentials (e.g., clients executing on the device used by the resource

owner, such as an installed native application or a web browser-based

application), and incapable of secure client authentication via any

other means.**

>

> The client type designation is based on the authorization server's

definition of secure authentication and its acceptable exposure levels

of client credentials. The authorization server SHOULD NOT make

assumptions about the client type.

https://datatracker.ietf.org/doc/html/rfc8252#section-8.4

> Authorization servers MUST record the client type in the client

registration details in order to identify and process requests

accordingly.

Require PKCE for public clients:

https://datatracker.ietf.org/doc/html/rfc8252#section-8.1

> Authorization servers SHOULD reject authorization requests from native

apps that don't use PKCE by returning an error message

Fixes#21299

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

When actions besides "delete" are performed on issues, the milestone

counter is updated. However, since deleting issues goes through a

different code path, the associated milestone's count wasn't being

updated, resulting in inaccurate counts until another issue in the same

milestone had a non-delete action performed on it.

I verified this change fixes the inaccurate counts using a local docker

build.

Fixes#21254

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

At the moment a repository reference is needed for webhooks. With the

upcoming package PR we need to send webhooks without a repository

reference. For example a package is uploaded to an organization. In

theory this enables the usage of webhooks for future user actions.

This PR removes the repository id from `HookTask` and changes how the

hooks are processed (see `services/webhook/deliver.go`). In a follow up

PR I want to remove the usage of the `UniqueQueue´ and replace it with a

normal queue because there is no reason to be unique.

Co-authored-by: 6543 <6543@obermui.de>

A lot of our code is repeatedly testing if individual errors are

specific types of Not Exist errors. This is repetitative and unnecesary.

`Unwrap() error` provides a common way of labelling an error as a

NotExist error and we can/should use this.

This PR has chosen to use the common `io/fs` errors e.g.

`fs.ErrNotExist` for our errors. This is in some ways not completely

correct as these are not filesystem errors but it seems like a

reasonable thing to do and would allow us to simplify a lot of our code

to `errors.Is(err, fs.ErrNotExist)` instead of

`package.IsErr...NotExist(err)`

I am open to suggestions to use a different base error - perhaps

`models/db.ErrNotExist` if that would be felt to be better.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

depends on #18871

Added some api integration tests to help testing of #18798.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Related:

* #21362

This PR uses a general and stable method to generate resource index (eg:

Issue Index, PR Index)

If the code looks good, I can add more tests

ps: please skip the diff, only have a look at the new code. It's

entirely re-written.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

For security reasons, all e-mail addresses starting with

non-alphanumeric characters were rejected. This is too broad and rejects

perfectly valid e-mail addresses. Only leading hyphens should be

rejected -- in all other cases e-mail address specification should

follow RFC 5322.

Co-authored-by: Andreas Fischer <_@ndreas.de>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

- Currently `repository.Num{Issues,Pulls}` weren't checked and could

become out-of-consistency. Adds these two checks to `CheckRepoStats`.

- Fix incorrect SQL query for `repository.NumClosedPulls`, the check

should be for `repo_num_pulls`.

- Reference: https://codeberg.org/Codeberg/Community/issues/696

Adds the settings pages to create OAuth2 apps also to the org settings

and allows to create apps for orgs.

Refactoring: the oauth2 related templates are shared for

instance-wide/org/user, and the backend code uses `OAuth2CommonHandlers`

to share code for instance-wide/org/user.

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Fixes#21250

Related #20414

Conan packages don't have to follow SemVer.

The migration fixes the setting for all existing Conan and Generic

(#20414) packages.

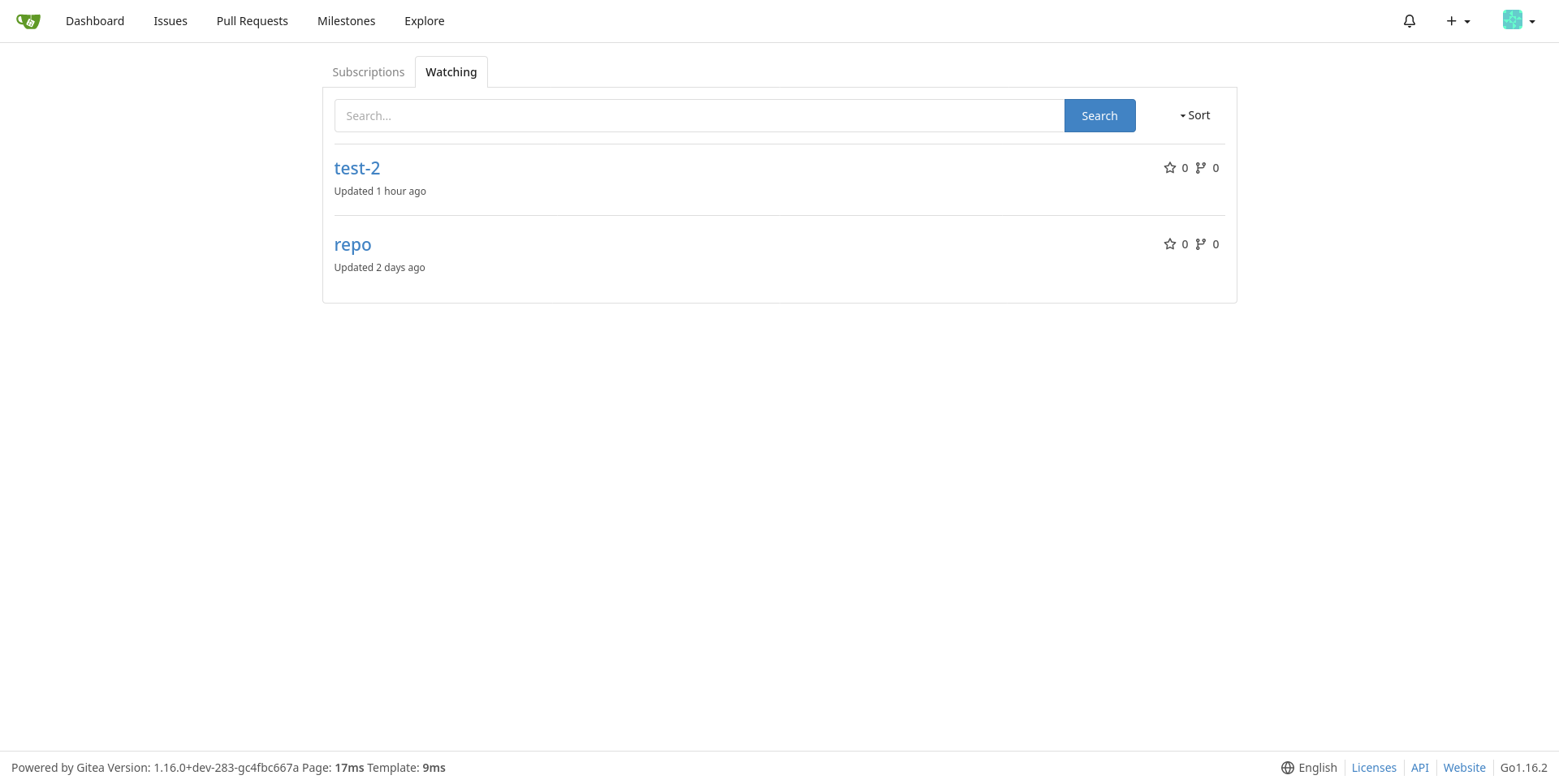

Adds GitHub-like pages to view watched repos and subscribed issues/PRs

This is my second try to fix this, but it is better than the first since

it doesn't uses a filter option which could be slow when accessing

`/issues` or `/pulls` and it shows both pulls and issues (the first try

is #17053).

Closes#16111

Replaces and closes#17053

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

This adds an api endpoint `/files` to PRs that allows to get a list of changed files.

built upon #18228, reviews there are included

closes https://github.com/go-gitea/gitea/issues/654

Co-authored-by: Anton Bracke <anton@ju60.de>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Fixes#21206

If user and viewer are equal the method should return true.

Also the common organization check was wrong as `count` can never be

less then 0.

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

There is a mistake in the batched delete comments part of DeleteUser which causes some comments to not be deleted

The code incorrectly updates the `start` of the limit clause resulting in most comments not being deleted.

```go

if err = e.Where("type=? AND poster_id=?", issues_model.CommentTypeComment, u.ID).Limit(batchSize, start).Find(&comments); err != nil {

```

should be:

```go

if err = e.Where("type=? AND poster_id=?", issues_model.CommentTypeComment, u.ID).Limit(batchSize, 0).Find(&comments); err != nil {

```

Co-authored-by: zeripath <art27@cantab.net>

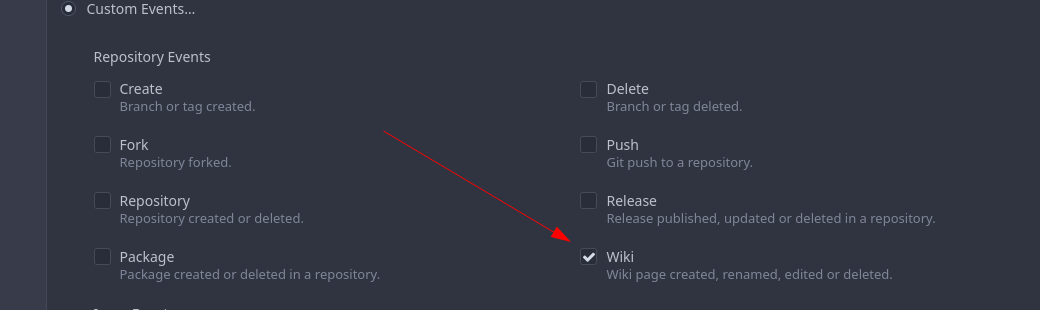

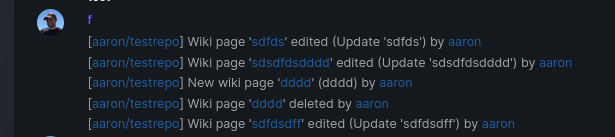

Add support for triggering webhook notifications on wiki changes.

This PR contains frontend and backend for webhook notifications on wiki actions (create a new page, rename a page, edit a page and delete a page). The frontend got a new checkbox under the Custom Event -> Repository Events section. There is only one checkbox for create/edit/rename/delete actions, because it makes no sense to separate it and others like releases or packages follow the same schema.

The actions itself are separated, so that different notifications will be executed (with the "action" field). All the webhook receivers implement the new interface method (Wiki) and the corresponding tests.

When implementing this, I encounter a little bug on editing a wiki page. Creating and editing a wiki page is technically the same action and will be handled by the ```updateWikiPage``` function. But the function need to know if it is a new wiki page or just a change. This distinction is done by the ```action``` parameter, but this will not be sent by the frontend (on form submit). This PR will fix this by adding the ```action``` parameter with the values ```_new``` or ```_edit```, which will be used by the ```updateWikiPage``` function.

I've done integration tests with matrix and gitea (http).

Fix#16457

Signed-off-by: Aaron Fischer <mail@aaron-fischer.net>

A testing cleanup.

This pull request replaces `os.MkdirTemp` with `t.TempDir`. We can use the `T.TempDir` function from the `testing` package to create temporary directory. The directory created by `T.TempDir` is automatically removed when the test and all its subtests complete.

This saves us at least 2 lines (error check, and cleanup) on every instance, or in some cases adds cleanup that we forgot.

Reference: https://pkg.go.dev/testing#T.TempDir

```go

func TestFoo(t *testing.T) {

// before

tmpDir, err := os.MkdirTemp("", "")

require.NoError(t, err)

defer os.RemoveAll(tmpDir)

// now

tmpDir := t.TempDir()

}

```

Signed-off-by: Eng Zer Jun <engzerjun@gmail.com>

When migrating add several more important sanity checks:

* SHAs must be SHAs

* Refs must be valid Refs

* URLs must be reasonable

Signed-off-by: Andrew Thornton <art27@cantab.net>

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: techknowlogick <matti@mdranta.net>

In #21031 we have discovered that on very big tables postgres will use a

search involving the sort term in preference to the restrictive index.

Therefore we add another index for postgres and update the original migration.

Fix#21031

Signed-off-by: Andrew Thornton <art27@cantab.net>

* fix hard-coded timeout and error panic in API archive download endpoint

This commit updates the `GET /api/v1/repos/{owner}/{repo}/archive/{archive}`

endpoint which prior to this PR had a couple of issues.

1. The endpoint had a hard-coded 20s timeout for the archiver to complete after

which a 500 (Internal Server Error) was returned to client. For a scripted

API client there was no clear way of telling that the operation timed out and

that it should retry.

2. Whenever the timeout _did occur_, the code used to panic. This was caused by

the API endpoint "delegating" to the same call path as the web, which uses a

slightly different way of reporting errors (HTML rather than JSON for

example).

More specifically, `api/v1/repo/file.go#GetArchive` just called through to

`web/repo/repo.go#Download`, which expects the `Context` to have a `Render`

field set, but which is `nil` for API calls. Hence, a `nil` pointer error.

The code addresses (1) by dropping the hard-coded timeout. Instead, any

timeout/cancelation on the incoming `Context` is used.

The code addresses (2) by updating the API endpoint to use a separate call path

for the API-triggered archive download. This avoids producing HTML-errors on

errors (it now produces JSON errors).

Signed-off-by: Peter Gardfjäll <peter.gardfjall.work@gmail.com>

Adds a new option to only show relevant repo's on the explore page, for bigger Gitea instances like Codeberg this is a nice option to enable to make the explore page more populated with unique and "high" quality repo's. A note is shown that the results are filtered and have the possibility to see the unfiltered results.

Co-authored-by: vednoc <vednoc@protonmail.com>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: 6543 <6543@obermui.de>

Unfortunately some keys are too big to fix within the 65535 limit of TEXT on MySQL

this causes issues with these large keys.

Therefore increase these fields to MEDIUMTEXT.

Fix#20894

Signed-off-by: Andrew Thornton <art27@cantab.net>

- Currently the function takes in the `UserID` option, but isn't being

used within the SQL query. This patch fixes that by checking that only

teams are being returned that the user belongs to.

Fix#20829

Co-authored-by: delvh <dev.lh@web.de>

Whilst looking at #20840 I noticed that the Mirrors data doesn't appear

to be being used therefore we can remove this and in fact none of the

related code is used elsewhere so it can also be removed.

Related #20840

Related #20804

Signed-off-by: Andrew Thornton <art27@cantab.net>

Signed-off-by: Andrew Thornton <art27@cantab.net>

In MirrorRepositoryList.loadAttributes there is some code to load the Mirror entries

from the database. This assumes that every Repository which has IsMirror set has

a Mirror associated in the DB. This association is incorrect in the case of

Mirror repository under creation when there is no Mirror entry in the DB until

completion.

Unfortunately LoadAttributes makes this incorrect assumption and presumes that a

Mirror will always be loaded. This then causes a panic.

This PR simply double checks if there a Mirror before attempting to link back to

its Repo. Unfortunately it should be expected that there may be other cases where

this incorrect assumption causes further problems.

Fix#20804

Signed-off-by: Andrew Thornton <art27@cantab.net>

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

* merge `CheckLFSVersion` into `InitFull` (renamed from `InitWithSyncOnce`)

* remove the `Once` during git init, no data-race now

* for doctor sub-commands, `InitFull` should only be called in initialization stage

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

* Added support for Pub packages.

* Update docs/content/doc/packages/overview.en-us.md

Co-authored-by: Gergely Nagy <algernon@users.noreply.github.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: Gergely Nagy <algernon@users.noreply.github.com>

Co-authored-by: Lauris BH <lauris@nix.lv>

- Add a new push mirror to specific repository

- Sync now ( send all the changes to the configured push mirrors )

- Get list of all push mirrors of a repository

- Get a push mirror by ID

- Delete push mirror by ID

Signed-off-by: Mohamed Sekour <mohamed.sekour@exfo.com>

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: zeripath <art27@cantab.net>

WebAuthn have updated their specification to set the maximum size of the

CredentialID to 1023 bytes. This is somewhat larger than our current

size and therefore we need to migrate.

The PR changes the struct to add CredentialIDBytes and migrates the CredentialID string

to the bytes field before another migration drops the old CredentialID field. Another migration

renames this field back.

Fix#20457

Signed-off-by: Andrew Thornton <art27@cantab.net>

Sometimes users want to receive email notifications of messages they create or reply to,

Added an option to personal preferences to allow users to choose

Closes#20149

Was looking into the visibility checks because I need them for something different and noticed the checks are more complicated than they have to be.

The rule is just: user/org is visible if

- The doer is a member of the org, regardless of the org visibility

- The doer is not restricted and the user/org is public or limited

When viewing a subdirectory and the latest commit to that directory in

the table, the commit status icon incorrectly showed the status of the

HEAD commit instead of the latest for that directory.

* Fixes issue #19603 (Not able to merge commit in PR when branches content is same, but different commit id)

* fill HeadCommitID in PullRequest

* compare real commits ID as check for merging

* based on @zeripath patch in #19738

- Currently when a Team has read access to a organization's non-private

repository, their access won't be stored in the database. This caused

issue for code that rely on read access being stored. So from now-on if

we see that the repository is owned by a organization don't increase the

minMode to write permission.

- Resolves#20083

Support synchronizing with the push mirrors whenever new commits are pushed or synced from pull mirror.

Related Issues: #18220

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

* Add git.HOME_PATH

* add legacy file check

* Apply suggestions from code review

Co-authored-by: zeripath <art27@cantab.net>

* pass env GNUPGHOME to git command, move the existing .gitconfig to new home, make the fix for 1.17rc more clear.

* set git.HOME_PATH for docker images to default HOME

* Revert "set git.HOME_PATH for docker images to default HOME"

This reverts commit f120101ddc.

* force Gitea to use a stable GNUPGHOME directory

* extra check to ensure only process dir or symlink for legacy files

* refactor variable name

* The legacy dir check (for 1.17-rc1) could be removed with 1.18 release, since users should have upgraded from 1.17-rc to 1.17-stable

* Update modules/git/git.go

Co-authored-by: Steven Kriegler <61625851+justusbunsi@users.noreply.github.com>

* remove initFixGitHome117rc

* Update git.go

* Update docs/content/doc/advanced/config-cheat-sheet.en-us.md

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Steven Kriegler <61625851+justusbunsi@users.noreply.github.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Users who are following or being followed by a user should only be

displayed if the viewing user can see them.

Signed-off-by: Andrew Thornton <art27@cantab.net>

The setting `DEFAULT_SHOW_FULL_NAME` promises to use the user's full name everywhere it can be used.

Unfortunately the function `*user_model.User.ShortName()` currently uses the `.Name` instead - but this should also use the `.FullName()`.

Therefore we should make `*user_model.User.ShortName()` base its pre-shortened name on the `.FullName()` function.

Unforunately the previous PR #20035 created indices that were not helpful

for SQLite. This PR adjusts these after testing using the try.gitea.io db.

Fix#20129

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Check if project has the same repository id with issue when assign project to issue

* Check if issue's repository id match project's repository id

* Add more permission checking

* Remove invalid argument

* Fix errors

* Add generic check

* Remove duplicated check

* Return error + add check for new issues

* Apply suggestions from code review

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Co-authored-by: Gusted <williamzijl7@hotmail.com>

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Co-authored-by: 6543 <6543@obermui.de>

This PR adds a new manager command to switch on SQL logging and to turn it off.

```

gitea manager logging log-sql

gitea manager logging log-sql --off

```

Signed-off-by: Andrew Thornton <art27@cantab.net>

There appears to be a strange bug whereby the comment_id index can sometimes be missed

or missing from the action table despite the sync2 that should create it in the earlier

part of this migration. However, looking through the code for Sync2 there is no need

for this pre-code to exist and Sync2 should drop/create the indices as necessary.

I think therefore we should simplify the migration to simply be Sync2.

Signed-off-by: Andrew Thornton <art27@cantab.net>

* go.mod: add go-fed/{httpsig,activity/pub,activity/streams} dependency

go get github.com/go-fed/activity/streams@master

go get github.com/go-fed/activity/pub@master

go get github.com/go-fed/httpsig@master

* activitypub: implement /api/v1/activitypub/user/{username} (#14186)

Return informations regarding a Person (as defined in ActivityStreams

https://www.w3.org/TR/activitystreams-vocabulary/#dfn-person).

Refs: https://github.com/go-gitea/gitea/issues/14186

Signed-off-by: Loïc Dachary <loic@dachary.org>

* activitypub: add the public key to Person (#14186)

Refs: https://github.com/go-gitea/gitea/issues/14186

Signed-off-by: Loïc Dachary <loic@dachary.org>

* activitypub: go-fed conformant Clock instance

Signed-off-by: Loïc Dachary <loic@dachary.org>

* activitypub: signing http client

Signed-off-by: Loïc Dachary <loic@dachary.org>

* activitypub: implement the ReqSignature middleware

Signed-off-by: Loïc Dachary <loic@dachary.org>

* activitypub: hack_16834

Signed-off-by: Loïc Dachary <loic@dachary.org>

* Fix CI checks-backend errors with go mod tidy

Signed-off-by: Anthony Wang <ta180m@pm.me>

* Change 2021 to 2022, properly format package imports

Signed-off-by: Anthony Wang <ta180m@pm.me>

* Run make fmt and make generate-swagger

Signed-off-by: Anthony Wang <ta180m@pm.me>

* Use Gitea JSON library, add assert for pkp

Signed-off-by: Anthony Wang <ta180m@pm.me>

* Run make fmt again, fix err var redeclaration

Signed-off-by: Anthony Wang <ta180m@pm.me>

* Remove LogSQL from ActivityPub person test

Signed-off-by: Anthony Wang <ta180m@pm.me>

* Assert if json.Unmarshal succeeds

Signed-off-by: Anthony Wang <ta180m@pm.me>

* Cleanup, handle invalid usernames for ActivityPub person GET request

Signed-off-by: Anthony Wang <ta180m@pm.me>

* Rename hack_16834 to user_settings

Signed-off-by: Anthony Wang <ta180m@pm.me>

* Use the httplib module instead of http for GET requests

* Clean up whitespace with make fmt

* Use time.RFC1123 and make the http.Client proxy-aware

* Check if digest algo is supported in setting module

* Clean up some variable declarations

* Remove unneeded copy

* Use system timezone instead of setting.DefaultUILocation

* Use named constant for httpsigExpirationTime

* Make pubKey IRI #main-key instead of /#main-key

* Move /#main-key to #main-key in tests

* Implemented Webfinger endpoint.

* Add visible check.

* Add user profile as alias.

* Add actor IRI and remote interaction URL to WebFinger response

* fmt

* Fix lint errors

* Use go-ap instead of go-fed

* Run go mod tidy to fix missing modules in go.mod and go.sum

* make fmt

* Convert remaining code to go-ap

* Clean up go.sum

* Fix JSON unmarshall error

* Fix CI errors by adding @context to Person() and making sure types match

* Correctly decode JSON in api_activitypub_person_test.go

* Force CI rerun

* Fix TestActivityPubPersonInbox segfault

* Fix lint error

* Use @mariusor's suggestions for idiomatic go-ap usage

* Correctly add inbox/outbox IRIs to person

* Code cleanup

* Remove another LogSQL from ActivityPub person test

* Move httpsig algos slice to an init() function

* Add actor IRI and remote interaction URL to WebFinger response

* Update TestWebFinger to check for ActivityPub IRI in aliases

* make fmt

* Force CI rerun

* WebFinger: Add CORS header and fix Href -> Template for remote interactions

The CORS header is needed due to https://datatracker.ietf.org/doc/html/rfc7033#section-5 and fixes some Peertube <-> Gitea federation issues

* make lint-backend

* Make sure Person endpoint has Content-Type application/activity+json and includes PreferredUsername, URL, and Icon

Setting the correct Content-Type is essential for federating with Mastodon

* Use UTC instead of GMT

* Rename pkey to pubKey

* Make sure HTTP request Date in GMT

* make fmt

* dont drop err

* Make sure API responses always refer to username in original case

Copied from what I wrote on #19133 discussion: Handling username case is a very tricky issue and I've already encountered a Mastodon <-> Gitea federation bug due to Gitea considering Ta180m and ta180m to be the same user while Mastodon thinks they are two different users. I think the best way forward is for Gitea to only use the original case version of the username for federation so other AP software don't get confused.

* Move httpsig algs constant slice to modules/setting/federation.go

* Add new federation settings to app.example.ini and config-cheat-sheet

* Return if marshalling error

* Make sure Person IRIs are generated correctly

This commit ensures that if the setting.AppURL is something like "http://127.0.0.1:42567" (like in the integration tests), a trailing slash will be added after that URL.

* If httpsig verification fails, fix Host header and try again

This fixes a very rare bug when Gitea and another AP server (confirmed to happen with Mastodon) are running on the same machine, Gitea fails to verify incoming HTTP signatures. This is because the other AP server creates the sig with the public Gitea domain as the Host. However, when Gitea receives the request, the Host header is instead localhost, so the signature verification fails. Manually changing the host header to the correct value and trying the veification again fixes the bug.

* Revert "If httpsig verification fails, fix Host header and try again"

This reverts commit f53e46c721.

The bug was actually caused by nginx messing up the Host header when reverse-proxying since I didn't have the line `proxy_set_header Host $host;` in my nginx config for Gitea.

* Go back to using ap.IRI to generate inbox and outbox IRIs

* use const for key values

* Update routers/web/webfinger.go

* Use ctx.JSON in Person response to make code cleaner

* Revert "Use ctx.JSON in Person response to make code cleaner"

This doesn't work because the ctx.JSON() function already sends the response out and it's too late to edit the headers.

This reverts commit 95aad98897.

* Use activitypub.ActivityStreamsContentType for Person response Content Type

* Limit maximum ActivityPub request and response sizes to a configurable setting

* Move setting key constants to models/user/setting_keys.go

* Fix failing ActivityPubPerson integration test by checking the correct field for username

* Add a warning about changing settings that can break federation

* Add better comments

* Don't multiply Federation.MaxSize by 1<<20 twice

* Add more better comments

* Fix failing ActivityPubMissingPerson test

We now use ctx.ContextUser so the message printed out when a user does not exist is slightly different

* make generate-swagger

For some reason I didn't realize that /templates/swagger/v1_json.tmpl was machine-generated by make generate-swagger... I've been editing it by hand for three months! 🤦

* Move getting the RFC 2616 time to a separate function

* More code cleanup

* Update go-ap to fix empty liked collection and removed unneeded HTTP headers

* go mod tidy

* Add ed25519 to httpsig algorithms

* Use go-ap/jsonld to add @context and marshal JSON

* Change Gitea user agent from the default to Gitea/Version

* Use ctx.ServerError and remove all remote interaction code from webfinger.go

gitea doctor --run check-db-consistency is currently broken due to an incorrect

and old use of Count() with a string.

Signed-off-by: Andrew Thornton <art27@cantab.net>

* clean git support for ver < 2.0

* fine tune tests for markup (which requires git module)

* remove unnecessary comments

* try to fix tests

* try test again

* use const for GitVersionRequired instead of var

* try to fix integration test

* Refactor CheckAttributeReader to make a *git.Repository version

* update document for commit signing with Gitea's internal gitconfig

* update document for commit signing with Gitea's internal gitconfig

Co-authored-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

- Don't specify the field in `Count` instead use `Cols` for this.

- Call `log.Error` when a error occur.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

* When non-admin users use code search, get code unit accessible repos in one main query

* Modified some comments to match the changes

* Removed unnecessary check for Access Mode in Collaboration table

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Lauris BH <lauris@nix.lv>

* Move access and repo permission to models/perm/access

* fix test

* fix git test

* Move functions sequence

* Some improvements per @KN4CK3R and @delvh

* Move issues related code to models/issues

* Move some issues related sub package

* Merge

* Fix test

* Fix test

* Fix test

* Fix test

* Rename some files

* Move access and repo permission to models/perm/access

* fix test

* Move some git related files into sub package models/git

* Fix build

* fix git test

* move lfs to sub package

* move more git related functions to models/git

* Move functions sequence

* Some improvements per @KN4CK3R and @delvh

* Move some repository related code into sub package

* Move more repository functions out of models

* Fix lint

* Some performance optimization for webhooks and others

* some refactors

* Fix lint

* Fix

* Update modules/repository/delete.go

Co-authored-by: delvh <dev.lh@web.de>

* Fix test

* Merge

* Fix test

* Fix test

* Fix test

* Fix test

Co-authored-by: delvh <dev.lh@web.de>

Upgrade builder to v0.3.11

Upgrade xorm to v1.3.1 and fixed some hidden bugs.

Replace #19821

Replace #19834

Included #19850

Co-authored-by: zeripath <art27@cantab.net>

Milestones in archived repos should not be displayed on `/milestones`. Therefore

we should exclude these repositories from milestones page.

Fix#18257

Signed-off-by: Andrew Thornton <art27@cantab.net>

Looking through the logs of try.gitea.io I am seeing a number of reports

of being unable to APIformat stopwatches because the issueID is 0. These

are invalid StopWatches and they represent a db inconsistency.

This PR simply stops sending them to the eventsource.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

The issue was that only the actual title was converted to uppercase, but

not the prefix as specified in `WORK_IN_PROGRESS_PREFIXES`. As a result,

the following did not work:

WORK_IN_PROGRESS_PREFIXES=Draft:,[Draft],WIP:,[WIP]

One possible workaround was:

WORK_IN_PROGRESS_PREFIXES=DRAFT:,[DRAFT],WIP:,[WIP]

Then indeed one could use `Draft` (as well as `DRAFT`) in the title.

However, the link `Start the title with DRAFT: to prevent the pull request

from being merged accidentally.` showed the suggestion in uppercase; so

it is not possible to show it as `Draft`. This PR fixes it, and allows

to use `Draft` in `WORK_IN_PROGRESS_PREFIXES`.

Fixes#19779.

Co-authored-by: zeripath <art27@cantab.net>

Add ability to show source/target branches for Pull Request's list. It can be useful to see which branches are used in each PR right in the list.

Co-authored-by: Alexey Korobkov <akorobkov@cian.ru>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Lauris BH <lauris@nix.lv>

* Allows repo search to match against "owner/repo" pattern strings

* Gofumpt

* Adds test case for "owner/repo" style repo search

* With "owner/repo" search terms, prioritise results which match the owner field

* Fixes unquoted SQL string in repo search

* Makes comments in body text/title return the base page URL instead of "" in RefCommentHTMLURL()

* Add comment explaining branch

Co-authored-by: delvh <dev.lh@web.de>

- Don't use hacky solution to limit to the correct RepoID's, instead use

current code to handle these limits. The existing code is more correct

than the hacky solution.

- Resolves#19636

- Add test-case

- Don't log the reflect struct, but instead log the ID of the struct.

This improves the error message, as you would actually know which row is

the error.

* Fix indention

Signed-off-by: kolaente <k@knt.li>

* Add option to merge a pr right now without waiting for the checks to succeed

Signed-off-by: kolaente <k@knt.li>

* Fix lint

Signed-off-by: kolaente <k@knt.li>

* Add scheduled pr merge to tables used for testing

Signed-off-by: kolaente <k@knt.li>

* Add status param to make GetPullRequestByHeadBranch reusable

Signed-off-by: kolaente <k@knt.li>

* Move "Merge now" to a seperate button to make the ui clearer

Signed-off-by: kolaente <k@knt.li>

* Update models/scheduled_pull_request_merge.go

Co-authored-by: 赵智超 <1012112796@qq.com>

* Update web_src/js/index.js

Co-authored-by: 赵智超 <1012112796@qq.com>

* Update web_src/js/index.js

Co-authored-by: 赵智超 <1012112796@qq.com>

* Re-add migration after merge

* Fix frontend lint

* Fix version compare

* Add vendored dependencies

* Add basic tets

* Make sure the api route is capable of scheduling PRs for merging

* Fix comparing version

* make vendor

* adopt refactor

* apply suggestion: User -> Doer

* init var once

* Fix Test

* Update templates/repo/issue/view_content/comments.tmpl

* adopt

* nits

* next

* code format

* lint

* use same name schema; rm CreateUnScheduledPRToAutoMergeComment

* API: can not create schedule twice

* Add TestGetBranchNamesForSha

* nits

* new go routine for each pull to merge

* Update models/pull.go

Co-authored-by: a1012112796 <1012112796@qq.com>

* Update models/scheduled_pull_request_merge.go

Co-authored-by: a1012112796 <1012112796@qq.com>

* fix & add renaming sugestions

* Update services/automerge/pull_auto_merge.go

Co-authored-by: a1012112796 <1012112796@qq.com>

* fix conflict relicts

* apply latest refactors

* fix: migration after merge

* Update models/error.go

Co-authored-by: delvh <dev.lh@web.de>

* Update options/locale/locale_en-US.ini

Co-authored-by: delvh <dev.lh@web.de>

* Update options/locale/locale_en-US.ini

Co-authored-by: delvh <dev.lh@web.de>

* adapt latest refactors

* fix test

* use more context

* skip potential edgecases

* document func usage

* GetBranchNamesForSha() -> GetRefsBySha()

* start refactoring

* ajust to new changes

* nit

* docu nit

* the great check move

* move checks for branchprotection into own package

* resolve todo now ...

* move & rename

* unexport if posible

* fix

* check if merge is allowed before merge on scheduled pull

* debugg

* wording

* improve SetDefaults & nits

* NotAllowedToMerge -> DisallowedToMerge

* fix test

* merge files

* use package "errors"

* merge files

* add string names

* other implementation for gogit

* adapt refactor

* more context for models/pull.go

* GetUserRepoPermission use context

* more ctx

* use context for loading pull head/base-repo

* more ctx

* more ctx

* models.LoadIssueCtx()

* models.LoadIssueCtx()

* Handle pull_service.Merge in one DB transaction

* add TODOs

* next

* next

* next

* more ctx

* more ctx

* Start refactoring structure of old pull code ...

* move code into new packages

* shorter names ... and finish **restructure**

* Update models/branches.go

Co-authored-by: zeripath <art27@cantab.net>

* finish UpdateProtectBranch

* more and fix

* update datum

* template: use "svg" helper

* rename prQueue 2 prPatchCheckerQueue

* handle automerge in queue

* lock pull on git&db actions ...

* lock pull on git&db actions ...

* add TODO notes

* the regex

* transaction in tests

* GetRepositoryByIDCtx

* shorter table name and lint fix

* close transaction bevore notify

* Update models/pull.go

* next

* CheckPullMergable check all branch protections!

* Update routers/web/repo/pull.go

* CheckPullMergable check all branch protections!

* Revert "PullService lock via pullID (#19520)" (for now...)

This reverts commit 6cde7c9159a5ea75a10356feb7b8c7ad4c434a9a.

* Update services/pull/check.go

* Use for a repo action one database transaction

* Apply suggestions from code review

* Apply suggestions from code review

Co-authored-by: delvh <dev.lh@web.de>

* Update services/issue/status.go

Co-authored-by: delvh <dev.lh@web.de>

* Update services/issue/status.go

Co-authored-by: delvh <dev.lh@web.de>

* use db.WithTx()

* gofmt

* make pr.GetDefaultMergeMessage() context aware

* make MergePullRequestForm.SetDefaults context aware

* use db.WithTx()

* pull.SetMerged only with context

* fix deadlock in `test-sqlite\#TestAPIBranchProtection`

* dont forget templates

* db.WithTx allow to set the parentCtx

* handle db transaction in service packages but not router

* issue_service.ChangeStatus just had caused another deadlock :/

it has to do something with how notification package is handled

* if we merge a pull in one database transaktion, we get a lock, because merge infoce internal api that cant handle open db sessions to the same repo

* ajust to current master

* Apply suggestions from code review

Co-authored-by: delvh <dev.lh@web.de>

* dont open db transaction in router

* make generate-swagger

* one _success less

* wording nit

* rm

* adapt

* remove not needed test files

* rm less diff & use attr in JS

* ...

* Update services/repository/files/commit.go

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

* ajust db schema for PullAutoMerge

* skip broken pull refs

* more context in error messages

* remove webUI part for another pull

* remove more WebUI only parts

* API: add CancleAutoMergePR

* Apply suggestions from code review

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

* fix lint

* Apply suggestions from code review

* cancle -> cancel

Co-authored-by: delvh <dev.lh@web.de>

* change queue identifyer

* fix swagger

* prevent nil issue

* fix and dont drop error

* as per @zeripath

* Update integrations/git_test.go

Co-authored-by: delvh <dev.lh@web.de>

* Update integrations/git_test.go

Co-authored-by: delvh <dev.lh@web.de>

* more declarative integration tests (dedup code)

* use assert.False/True helper

Co-authored-by: 赵智超 <1012112796@qq.com>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

* GetFeeds must always discard actions with dangling repo_id

See https://discourse.gitea.io/t/blank-page-after-login/5051/12

for a panic in 1.16.6.

* add comment to explain the dangling ID in the fixture

* loadRepoOwner must not attempt to use a nil action.Repo

* make fmt

Co-authored-by: Loïc Dachary <loic@dachary.org>

* Only check for non-finished migrating task

- Only check if a non-finished migrating task exists for a mirror before

fetching the mirror details from the database.

- Resolves#19600

- Regression: #19588

* Clarify function

- When a repository is still being migrated, don't try to fetch the

Mirror from the database. Instead skip it. This allows to visit

repositories that are still being migrated and were configured to be

mirrored.

- Resolves#19585

- Regression: #19295

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

* Apply DefaultUserIsRestricted in CreateUser

* Enforce system defaults in CreateUser

Allow for overwrites with CreateUserOverwriteOptions

* Fix compilation errors

* Add "restricted" option to create user command

* Add "restricted" option to create user admin api

* Respect default setting.Service.RegisterEmailConfirm and setting.Service.RegisterManualConfirm where needed

* Revert "Respect default setting.Service.RegisterEmailConfirm and setting.Service.RegisterManualConfirm where needed"

This reverts commit ee95d3e8dc.

Targeting #14936, #15332

Adds a collaborator permissions API endpoint according to GitHub API: https://docs.github.com/en/rest/collaborators/collaborators#get-repository-permissions-for-a-user to retrieve a collaborators permissions for a specific repository.

### Checks the repository permissions of a collaborator.

`GET` `/repos/{owner}/{repo}/collaborators/{collaborator}/permission`

Possible `permission` values are `admin`, `write`, `read`, `owner`, `none`.

```json

{

"permission": "admin",

"role_name": "admin",

"user": {}

}

```

Where `permission` and `role_name` hold the same `permission` value and `user` is filled with the user API object. Only admins are allowed to use this API endpoint.

Adds a feature [like GitHub has](https://docs.github.com/en/pull-requests/collaborating-with-pull-requests/proposing-changes-to-your-work-with-pull-requests/creating-a-pull-request-from-a-fork) (step 7).

If you create a new PR from a forked repo, you can select (and change later, but only if you are the PR creator/poster) the "Allow edits from maintainers" option.

Then users with write access to the base branch get more permissions on this branch:

* use the update pull request button

* push directly from the command line (`git push`)

* edit/delete/upload files via web UI

* use related API endpoints

You can't merge PRs to this branch with this enabled, you'll need "full" code write permissions.

This feature has a pretty big impact on the permission system. I might forgot changing some things or didn't find security vulnerabilities. In this case, please leave a review or comment on this PR.

Closes#17728

Co-authored-by: 6543 <6543@obermui.de>

* Set correct PR status on 3way on conflict checking

- When 3-way merge is enabled for conflict checking, it has a new

interesting behavior that it doesn't return any error when it found a

conflict, so we change the condition to not check for the error, but

instead check if conflictedfiles is populated, this fixes a issue

whereby PR status wasn't correctly on conflicted PR's.

- Refactor the mergeable property(which was incorrectly set and lead me this

bug) to be more maintainable.

- Add a dedicated test for conflicting checking, so it should prevent

future issues with this.

* Fix linter

* remove error who is none

* use setupSessionNoLimit instead of setupSessionWithLimit when no pagination

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Follows: #19284

* The `CopyDir` is only used inside test code

* Rewrite `ToSnakeCase` with more test cases

* The `RedisCacher` only put strings into cache, here we use internal `toStr` to replace the legacy `ToStr`

* The `UniqueQueue` can use string as ID directly, no need to call `ToStr`

The main purpose is to refactor the legacy `unknwon/com` package.

1. Remove most imports of `unknwon/com`, only `util/legacy.go` imports the legacy `unknwon/com`

2. Use golangci's depguard to process denied packages

3. Fix some incorrect values in golangci.yml, eg, the version should be quoted string `"1.18"`

4. Use correctly escaped content for `go-import` and `go-source` meta tags

5. Refactor `com.Expand` to our stable (and the same fast) `vars.Expand`, our `vars.Expand` can still return partially rendered content even if the template is not good (eg: key mistach).

Follows #19266, #8553, Close#18553, now there are only three `Run..(&RunOpts{})` functions.

* before: `stdout, err := RunInDir(path)`

* now: `stdout, _, err := RunStdString(&git.RunOpts{Dir:path})`

This make checks in one single place so they dont differ and maintainer can not forget a check in one place while adding it to the other .... ( as it's atm )

Fix:

* The API does ignore issue dependencies where Web does not

* The API checks if "IsSignedIfRequired" where Web does not - UI probably do but nothing will some to craft custom requests

* Default merge message is crafted a bit different between API and Web if not set on specific cases ...

Adding additional usernames which are already routes, remove unused ones.

In future, avoid reserving names as much as possible, use `/-/` in path instead.

There is yet another problem with conflicted files not being reset when

the test patch resolves them.

This PR adjusts the code for checkConflicts to reset the ConflictedFiles

field immediately at the top. It also adds a reset to conflictedFiles

for the manuallyMerged and a shortcut for the empty status in

protectedfiles.

Signed-off-by: Andrew Thornton <art27@cantab.net>

The last PR about clone buttons introduced an JS error when visiting an empty repo page:

* https://github.com/go-gitea/gitea/pull/19028

* `Uncaught ReferenceError: isSSH is not defined`, because the variables are scoped and doesn't share between sub templates.

This:

1. Simplify `templates/repo/clone_buttons.tmpl` and make code clear

2. Move most JS code into `initRepoCloneLink`

3. Remove unused `CloneLink.Git`

4. Remove `ctx.Data["DisableSSH"] / ctx.Data["ExposeAnonSSH"] / ctx.Data["DisableHTTP"]`, and only set them when is is needed (eg: deploy keys / ssh keys)

5. Introduce `Data["CloneButton*"]` to provide data for clone buttons and links

6. Introduce `Data["RepoCloneLink"]` for the repo clone link (not the wiki)

7. Remove most `ctx.Data["PageIsWiki"]` because it has been set in the `/wiki` middleware

8. Remove incorrect `quickstart` class in `migrating.tmpl`

There is a bug in the system webhooks whereby the active state is not checked when

webhooks are prepared and there is a bug that deactivating webhooks do not prevent

queued deliveries.

* Only add SystemWebhooks to the prepareWebhooks list if they are active

* At the time of delivery if the underlying webhook is not active mark it

as "delivered" but with a failed delivery so it does not get delivered.

Fix#19220

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

* Touch mirrors on even on fail to update

If a mirror fails to be synchronised it should be pushed to the bottom of the queue

of the awaiting mirrors to be synchronised. At present if there LIMIT number of

broken mirrors they can effectively prevent all other mirrors from being synchronized

as their last_updated time will remain earlier than other mirrors.

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Set the default branch for repositories generated from templates

* Allows default branch to be set through the API for repos generated from templates

* Update swagger API template

* Only set default branch to the one from the template if not specified

* Use specified default branch if it exists while generating git commits

Fix#19082

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

Co-authored-by: zeripath <art27@cantab.net>

- Make a restriction on which issues can be shown based on if you the user or team has write permission to the repository.

- Fixes a issue whereby you wouldn't see any associated issues with a specific team on a organization if you wasn't a member(fixed by zeroing the User{ID} in the options).

- Resolves#18913

* Clean up protected_branches when deleting user

fixes#19094

* Clean up protected_branches when deleting teams

* fix issue

Co-authored-by: Lauris BH <lauris@nix.lv>

Storing the foreign identifier of an imported issue in the database is a prerequisite to implement idempotent migrations or mirror for issues. It is a baby step towards mirroring that introduces a new table.

At the moment when an issue is created by the Gitea uploader, it fails if the issue already exists. The Gitea uploader could be modified so that, instead of failing, it looks up the database to find an existing issue. And if it does it would update the issue instead of creating a new one. However this is not currently possible because an information is missing from the database: the foreign identifier that uniquely represents the issue being migrated is not persisted. With this change, the foreign identifier is stored in the database and the Gitea uploader will then be able to run a query to figure out if a given issue being imported already exists.

The implementation of mirroring for issues, pull requests, releases, etc. can be done in three steps:

1. Store an identifier for the element being mirrored (issue, pull request...) in the database (this is the purpose of these changes)

2. Modify the Gitea uploader to be able to update an existing repository with all it contains (issues, pull request...) instead of failing if it exists

3. Optimize the Gitea uploader to speed up the updates, when possible.

The second step creates code that does not yet exist to enable idempotent migrations with the Gitea uploader. When a migration is done for the first time, the behavior is not changed. But when a migration is done for a repository that already exists, this new code is used to update it.

The third step can use the code created in the second step to optimize and speed up migrations. For instance, when a migration is resumed, an issue that has an update time that is not more recent can be skipped and only newly created issues or updated ones will be updated. Another example of optimization could be that a webhook notifies Gitea when an issue is updated. The code triggered by the webhook would download only this issue and call the code created in the second step to update the issue, as if it was in the process of an idempotent migration.

The ForeignReferences table is added to contain local and foreign ID pairs relative to a given repository. It can later be used for pull requests and other artifacts that can be mirrored. Although the foreign id could be added as a single field in issues or pull requests, it would need to be added to all tables that represent something that can be mirrored. Creating a new table makes for a simpler and more generic design. The drawback is that it requires an extra lookup to obtain the information. However, this extra information is only required during migration or mirroring and does not impact the way Gitea currently works.

The foreign identifier of an issue or pull request is similar to the identifier of an external user, which is stored in reactions, issues, etc. as OriginalPosterID and so on. The representation of a user is however different and the ability of users to link their account to an external user at a later time is also a logic that is different from what is involved in mirroring or migrations. For these reasons, despite some commonalities, it is unclear at this time how the two tables (foreign reference and external user) could be merged together.

The ForeignID field is extracted from the issue migration context so that it can be dumped in files with dump-repo and later restored via restore-repo.

The GetAllComments downloader method is introduced to simplify the implementation and not overload the Context for the purpose of pagination. It also clarifies in which context the comments are paginated and in which context they are not.

The Context interface is no longer useful for the purpose of retrieving the LocalID and ForeignID since they are now both available from the PullRequest and Issue struct. The Reviewable and Commentable interfaces replace and serve the same purpose.

The Context data member of PullRequest and Issue becomes a DownloaderContext to clarify that its purpose is not to support in memory operations while the current downloader is acting but is not otherwise persisted. It is, for instance, used by the GitLab downloader to store the IsMergeRequest boolean and sort out issues.

---

[source](https://lab.forgefriends.org/forgefriends/forgefriends/-/merge_requests/36)

Signed-off-by: Loïc Dachary <loic@dachary.org>

Co-authored-by: Loïc Dachary <loic@dachary.org>

* Update the webauthn_credential_id_sequence in Postgres

There is (yet) another problem with v210 in that Postgres will silently allow preset

ID insertions ... but it will not update the sequence value.

This PR simply adds a little step to the end of the v210 migration to update the

sequence number.

Users who have already migrated who find that they cannot insert new

webauthn_credentials into the DB can either run:

```bash

gitea doctor recreate-table webauthn_credential

```

or

```bash

./gitea doctor --run=check-db-consistency --fix

```

which will fix the bad sequence.

Fix#19012

Signed-off-by: Andrew Thornton <art27@cantab.net>

Yet another issue has come up where the logging from SyncMirrors does not provide

enough context. This PR adds more context to these logging events.

Related #19038

Signed-off-by: Andrew Thornton <art27@cantab.net>

The review request feature was added in https://github.com/go-gitea/gitea/pull/10756,

where the doer got explicitly excluded from available reviewers. I don't see a

functionality or security related reason to forbid this case.

As shown by GitHubs implementation, it may be useful to self-request a review,

to be reminded oneselves about reviewing, while communicating to team mates that a

review is missing.

Co-authored-by: delvh <dev.lh@web.de>

* ignore missing comment for user notifications

* instead fix bug in notifications model

* use local variable instead

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: 6543 <6543@obermui.de>

Add new feature to delete issues and pulls via API

Co-authored-by: fnetx <git@fralix.ovh>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: Gusted <williamzijl7@hotmail.com>

Co-authored-by: 6543 <6543@obermui.de>

- Add helper method to reduce redundancy

- Expand the scope from displaying days to years

- Reduce irrelevance by not displaying small units (hours, minutes, seconds) when bigger ones apply (years)

* Avoid database lookups for `DescriptionHTML`

- Don't Compose meta's for DescriptionHTML, they are only needed in

order to correctly format and show issue's but it's highly unlikely that

a repository description will refer to a local issue.

Using 125 Connections for 5 seconds: on `/explore/repos`(which is the most

noticeable usage by this function's database lookups):

Before:

Statistics Avg Stdev Max

Reqs/sec 569.41 506.05 2715.00

Latency 214.27ms 16.60ms 294.84ms

HTTP codes:

1xx - 0, 2xx - 2974, 3xx - 0, 4xx - 0, 5xx - 0

others - 0

Throughput: 27.17MB/s

After:

Statistics Avg Stdev Max

Reqs/sec 1585.04 789.84 4144.56

Latency 78.89ms 15.89ms 206.94ms

HTTP codes:

1xx - 0, 2xx - 7975, 3xx - 0, 4xx - 0, 5xx - 0

others - 0

Throughput: 73.85MB/s

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: zeripath <art27@cantab.net>

* Fix page and missing return on unadopted repos API

Page must be 1 if it's not specified and it should return after sending an internal server error.

* Allow ignore pages

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

- Fix regression caused by: f1b1472632

- Don't try to insert a email for Organisation(as they don't have one).

- Resolves#18891

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

* logs: add the buffer logger to inspect logs during testing

Signed-off-by: Loïc Dachary <loic@dachary.org>

* migrations: add test for importing pull requests in gitea uploader

Signed-off-by: Loïc Dachary <loic@dachary.org>

* for each git.OpenRepositoryCtx, call Close

* Content is expected to return the content of the log

* test for errors before defer

Co-authored-by: Loïc Dachary <loic@dachary.org>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Following the merging of #17811 teams can now have differing write and readonly permissions, however the assignee list will not include teams which have mixed perms.

Further the org sidebar is no longer helpful as it can't describe these mixed permissions situations.

Fix#18572

Signed-off-by: Andrew Thornton <art27@cantab.net>

We can't depend on `latest` version of gofumpt because the output will

not be stable across versions. Lock it down to the latest version

released yesterday and run it again.

* Use email_address table to check user's email when login with email adress

* Update services/auth/signin.go

* Fix test

* Fix test

* Fix logging in with ldap username != loginname

* Fix if user does not exist yet

* Make more clear this is loginName

* Fix formatting

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: zeripath <art27@cantab.net>

v208.go is seriously broken as it misses an ID() check. We need to no-op and remigrate all of the u2f keys.

See #18756

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Fix display time of milestones

* Move the SecToTime function

From the models/issue_stopwatch.go file to the modules/util package

* Rename the sec_to_time file

* Updated formatting

* Include copyright notice in sec_to_time.go

* Apply PR review suggestions

- Update copyright notice dates to 2022

- Change `1 day 3h 5min 7s` to `1d 3h 5m 7s`

* Rename hrs var and combine conditions

* Update unit tests to match new time pattern

Changed `1min` to `1m`

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Unfortunately credentialIDs in u2f are 255 bytes long which with base32 encoding

becomes 408 bytes. The default size of a xorm string field is only a VARCHAR(255)

This problem is not apparent on SQLite because strings get mapped to TEXT there.

Fix#18727

Signed-off-by: Andrew Thornton <art27@cantab.net>

- Don't let `TypeExternalTracker` or `TypeExternalWiki` influence the

minimal permission, as they won't be higher than read. So even if all

the other ones are write, these 2 will ensure that's not higher than

read.

- Partially resolves#18572 (Point 1,2,5?)

Co-authored-by: zeripath <art27@cantab.net>

There is no need to call UpdateRepoStats in the InsertIssues and

InsertPullRequests function. They are only called during migration by

the CreateIssues and CreateReviews methods of the gitea uploader.

The UpdateRepoStats function will be called by the Finish method of

the gitea uploader after all reviews and issues are inserted. Calling

it before is therefore redundant and the associated SQL requests are

not cheap.

The statistics tests done after inserting an issue or a pull request

are also removed. They predate the implementation of UpdateRepoStats,

back when the calculation of the statistics was an integral part of

the migration function. The UpdateRepoStats is now tested

independantly and these tests are no longer necessary.

Signed-off-by: singuliere <singuliere@autistici.org>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

When calling DumpRepository and RestoreRepository on the same Gitea

instance, the users are preserved: all labels, issues etc. belong to

the external user who is, in this particular case, the local user.

Dead code verifying g.gitServiceType.Name() == "" (i.e. plain git) is

removed. The function is never called because the plain git downloader

does not migrate anything that is associated to a user, by definition.

Errors returned by GetUserIDByExternalUserID are no longer ignored.

The userMap is used when the external user is not kown, which is the

most common case. It was only used when the external user exists

which happens less often and, as a result, every occurence of an

unknown external user required a SQL query.

Signed-off-by: Loïc Dachary <loic@dachary.org>

Co-authored-by: Loïc Dachary <loic@dachary.org>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

- Switch to use `CryptoRandomBytes` instead of `CryptoRandomString`, OAuth's secrets are copied pasted and don't need to avoid dubious characters etc.

- `CryptoRandomBytes` gives  `CryptoRandomString` gives  possible states.

- Add a prefix, such that code scanners can easily grep these in source code.

- 32 Bytes + prefix

* Collaborator trust model should trust collaborators

There was an unintended regression in #17917 which leads to only

repository admin commits being trusted. This PR restores the old logic.

Fix#18501

Signed-off-by: Andrew Thornton <art27@cantab.net>

* COrrect use `UserID` in `SearchTeams`

- Use `UserID` in the `SearchTeams` function, currently it was useless

to pass such information. Now it does a INNER statement to `team_user`

which obtains UserID -> TeamID data.

- Make OrgID optional.

- Resolves#18484

* Seperate searching specific user

* Add condition back

* Use correct struct type

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

* add test coverage for original author conversion during migrations

And create a function to factorize a code snippet that is repeated

five times and would otherwise be more difficult to test and maintain

consistently.

Signed-off-by: Loïc Dachary <loic@dachary.org>

* fix variable scope and int64 formatting

* add missing calls to remapExternalUser and fix misplaced %d

Co-authored-by: Loïc Dachary <loic@dachary.org>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

The endpoint /{username}/{reponame}/milestone/{id} is not currently restricted to

the repo. This PR restricts the milestones to those within the repo.

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Add config option to hide issue events

Adds a config option `HIDE_ISSUE_EVENTS` to hide most issue events (changed labels, milestones, projects...) on the issue detail page.

If this is true, only the following events (comment types) are shown:

* plain comments

* closed/reopned/merged

* reviews

* Make configurable using a list

* Add docs

* Add missing newline

* Fix merge issues

* Allow changes per user settings

* Fix lint

* Rm old docs

* Apply suggestions from code review

* Use bitsets

* Rm comment

* fmt

* Fix lint

* Use variable/constant to provide key

* fmt

* fix lint

* refactor

* Add a prefix for user setting key

* Add license comment

* Add license comment

* Update services/forms/user_form_hidden_comments.go

Co-authored-by: Gusted <williamzijl7@hotmail.com>

* check len == 0

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: Gusted <williamzijl7@hotmail.com>

Co-authored-by: 6543 <6543@obermui.de>

This PR continues the work in #17125 by progressively ensuring that git

commands run within the request context.

This now means that the if there is a git repo already open in the context it will be used instead of reopening it.

Signed-off-by: Andrew Thornton <art27@cantab.net>

The CheckRepoStats function missed the following counters:

- label num_closed_issues & num_closed_pulls

- milestone num_closed_issues & num_closed_pulls

The update SQL statements for updating the repository

num_closed_issues & num_closed_pulls fields were repeated in three

functions (repo.CheckRepoStats, migrate.insertIssues and

models.Issue.updateClosedNum) and were moved to a single helper.

The UpdateRepoStats is implemented and called in the Finish migration method so that it happens immediately instead of wating for the

CheckRepoStats to run.

Signed-off-by: Loïc Dachary loic@dachary.org

---

[source](https://lab.forgefriends.org/forgefriends/forgefriends/-/merge_requests/34)

This contains some additional fixes and small nits related to #17957

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Migrate from U2F to Webauthn

Co-authored-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

we don't want reviews to count towards comments, as this needs changes

in other components as well (eg repo stats cron job, etc).

Co-authored-by: 6543 <6543@obermui.de>

* migrations: a deadline at January 1st, 1970 is valid

Do not change the deadline value if it is set to January 1st, 1970.

Setting the deadline to year 9999 when it is zero (which is equal to

January 1st, 1970) modifies a deadline set to January 1st, 1970 which

is a valid date. In addition, setting a date in year 9999 will be

converted to a null date in some cases.

Signed-off-by: Loïc Dachary <loic@dachary.org>

* tests: set milestone.deadline_unix in fixtures

The value of deadline_unix must be set to 253370764800 (i.e. 9999-01-01) in

fixtures, otherwise it will be inserted as null which leads to

unexpected errors. For instance, DumpRepository will store a null

deadline_unix as 0 (i.e. 1970-01-01) and RestoreRepository will change

it to 9999-01-01.

Signed-off-by: Loïc Dachary <loic@dachary.org>

Co-authored-by: Loïc Dachary <loic@dachary.org>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

- Don't use `ioutil` package anymore as it doesn't anything special

anymore since Go 1.16:

```

// As of Go 1.16, the same functionality is now provided

// by package io or package os, and those implementations

// should be preferred in new code.

```

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

* Team permission allow different unit has different permission

* Finish the interface and the logic

* Fix lint

* Fix translation

* align center for table cell content

* Fix fixture

* merge

* Fix test

* Add deprecated

* Improve code

* Add tooltip

* Fix swagger

* Fix newline

* Fix tests

* Fix tests

* Fix test

* Fix test

* Max permission of external wiki and issues should be read

* Move team units with limited max level below units table

* Update label and column names

* Some improvements

* Fix lint

* Some improvements

* Fix template variables

* Add permission docs

* improve doc

* Fix fixture

* Fix bug

* Fix some bug

* fix

* gofumpt

* Integration test for migration (#18124)

integrations: basic test for Gitea {dump,restore}-repo

This is a first step for integration testing of DumpRepository and

RestoreRepository. It:

runs a Gitea server,

dumps a repo via DumpRepository to the filesystem,

restores the repo via RestoreRepository from the filesystem,

dumps the restored repository to the filesystem,

compares the first and second dump and expects them to be identical

The verification is trivial and the goal is to add more tests for each

topic of the dump.

Signed-off-by: Loïc Dachary <loic@dachary.org>

* Team permission allow different unit has different permission

* Finish the interface and the logic

* Fix lint

* Fix translation

* align center for table cell content

* Fix fixture

* merge

* Fix test

* Add deprecated

* Improve code

* Add tooltip

* Fix swagger

* Fix newline

* Fix tests

* Fix tests

* Fix test

* Fix test

* Max permission of external wiki and issues should be read

* Move team units with limited max level below units table

* Update label and column names

* Some improvements

* Fix lint

* Some improvements

* Fix template variables

* Add permission docs

* improve doc

* Fix fixture

* Fix bug

* Fix some bug

* Fix bug

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Aravinth Manivannan <realaravinth@batsense.net>

- The current implementation of `RandomString` doesn't give you a most-possible unique randomness. It gives you 6*`length` instead of the possible 8*`length` bits(or as `length`x bytes) randomness. This is because `RandomString` is being limited to a max value of 63, this in order to represent the random byte as a letter/digit.

- The recommendation of pbkdf2 is to use 64+ bit salt, which the `RandomString` doesn't give with a length of 10, instead of increasing 10 to a higher number, this patch adds a new function called `RandomBytes` which does give you the guarentee of 8*`length` randomness and thus corresponding of `length`x bytes randomness.

- Use hexadecimal to store the bytes value in the database, as mentioned, it doesn't play nice in order to convert it to a string. This will always be a length of 32(with `length` being 16).

- When we detect on `Authenticate`(source: db) that a user has the old format of salt, re-hash the password such that the user will have it's password hashed with increased salt.

Thanks to @zeripath for working out the rouge edges from my first commit 😄.