Fix #28761, Fix #27884, Fix #28093

## Changes

### Rerun all jobs

When all jobs are rerun, the status of jobs with `needs` will be set to `blocked` instead of `waiting`. Therefore, these jobs will not run until the required jobs are completed.

### Rerun a single job

When a single job is rerun, its dependents should also be rerun, just like GitHub does (https://github.com/go-gitea/gitea/issues/28761#issuecomment-2008620820).

In this case, only the specified job will be set to `waiting`; its dependents will be set to `blocked` to wait for the job.

### Show warning if every job has `needs`

If every job in a workflow has `needs`, all jobs will be blocked and no job can run. So I added a warning message.

<img src="https://github.com/go-gitea/gitea/assets/15528715/88f43511-2360-465d-be96-ee92b57ff67b" width="480px" />
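The status rules above can be sketched as follows. This is a minimal illustration, not Gitea's implementation. The `Job` type, the function names and the breadth-first walk over dependents are assumptions made for the example.

```go
package main

import "fmt"

// Status mirrors the status names used above; the type itself is hypothetical.
type Status string

const (
	StatusWaiting Status = "waiting"
	StatusBlocked Status = "blocked"
)

// Job is a hypothetical, minimal job: an ID plus the IDs listed in its `needs`.
type Job struct {
	ID    string
	Needs []string
}

// rerunAll: jobs without `needs` may start (waiting), jobs with `needs` wait (blocked).
func rerunAll(jobs []Job) map[string]Status {
	out := make(map[string]Status, len(jobs))
	for _, j := range jobs {
		if len(j.Needs) > 0 {
			out[j.ID] = StatusBlocked
		} else {
			out[j.ID] = StatusWaiting
		}
	}
	return out
}

// rerunJob: the target becomes waiting and every direct or transitive dependent
// becomes blocked. Jobs missing from the result are left untouched.
func rerunJob(jobs []Job, target string) map[string]Status {
	dependents := map[string][]string{} // job ID -> IDs of jobs that need it
	for _, j := range jobs {
		for _, n := range j.Needs {
			dependents[n] = append(dependents[n], j.ID)
		}
	}
	out := map[string]Status{target: StatusWaiting}
	queue := []string{target}
	for len(queue) > 0 {
		cur := queue[0]
		queue = queue[1:]
		for _, d := range dependents[cur] {
			if _, seen := out[d]; !seen {
				out[d] = StatusBlocked
				queue = append(queue, d)
			}
		}
	}
	return out
}

// allJobsHaveNeeds reports the case that triggers the warning: no job can ever start.
func allJobsHaveNeeds(jobs []Job) bool {
	for _, j := range jobs {
		if len(j.Needs) == 0 {
			return false
		}
	}
	return len(jobs) > 0
}

func main() {
	jobs := []Job{{ID: "build"}, {ID: "test", Needs: []string{"build"}}, {ID: "deploy", Needs: []string{"test"}}}
	fmt.Println(rerunAll(jobs))
	fmt.Println(rerunJob(jobs, "test"))
	fmt.Println(allJobsHaveNeeds(jobs))
}
```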

This PR avoids loading `pullrequest.Issue` twice on the pull request list page. This removes one redundant database query for each WIP pull request on the page.

Partially fixes #29585

---------

Co-authored-by: Giteabot <teabot@gitea.io>

This PR fixes a bug where a user who switches pages too fast is logged out automatically.

The reason is that when the error was a context cancellation, the previous code assumed the user was not logged in, so the session was deleted. Now the error is returned instead of being treated as "not logged in".

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Regression of #29493. If a branch has been deleted, re-pushing it won't restore it.

Lunny may have noticed that, but I didn't delve into the comment and overlooked it:

https://github.com/go-gitea/gitea/pull/29493#discussion_r1509046867

The additional comments explain an issue I found during testing, which is unrelated to the fixes.

When reading the code `pager.AddParam(ctx, "search", "search")`, the same questions always come up: What is it doing? Where does the value come from? Why "search" / "search"?

Now it is clear: `pager.AddParamIfExist("search", ctx.Data["search"])`
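Below is a minimal sketch of what such a helper might look like. The `Pager` type here is hypothetical and only collects query parameters. Treating a nil or empty value as "not existing" is an assumption about the helper's semantics.

```go
package main

import (
	"fmt"
	"net/url"
)

// Pager is a hypothetical stand-in that only collects query parameters.
type Pager struct {
	params url.Values
}

func NewPager() *Pager { return &Pager{params: url.Values{}} }

// AddParamIfExist adds key=value only when value is non-nil and non-empty,
// so the call site shows both the parameter name and where the value comes from.
func (p *Pager) AddParamIfExist(key string, value any) {
	if value == nil {
		return
	}
	if s := fmt.Sprint(value); s != "" {
		p.params.Set(key, s)
	}
}

// Query renders the collected parameters as an encoded query string.
func (p *Pager) Query() string { return p.params.Encode() }

func main() {
	data := map[string]any{"search": "bug"} // plays the role of ctx.Data
	p := NewPager()
	p.AddParamIfExist("search", data["search"])
	p.AddParamIfExist("state", data["state"]) // missing key yields nil: skipped
	fmt.Println(p.Query())
}
```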

Fix #29763

This PR fixes 2 problems with CodeOwner in pull requests.

- Use the merge-base, rather than the pull request's base branch, as the diff base to detect code owners.
- CodeOwner detection in fork repositories is disabled: almost all fork repositories keep the CODEOWNERS file unchanged from upstream, but that file should not be applied to pull requests in fork repositories.

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

The branch page for the Blender project takes 6s to load because calculating divergence is very slow.

This PR adds a cache for the branch divergence calculation, so on the second visit the branch list takes less than 200ms.
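One way to build such a cache is sketched below under stated assumptions, because the source does not say how entries are keyed or invalidated. Here the key includes the repository ID and both commit IDs, so new commits simply miss the cache instead of returning stale values. All names are hypothetical.

```go
package main

import (
	"fmt"
	"sync"
)

// Divergence holds ahead/behind counts of a branch relative to the default branch.
type Divergence struct{ Ahead, Behind int }

// divergenceCache is a hypothetical in-memory cache.
type divergenceCache struct {
	mu    sync.Mutex
	items map[string]Divergence
}

func newDivergenceCache() *divergenceCache {
	return &divergenceCache{items: map[string]Divergence{}}
}

// Get returns the cached value, or computes and stores it via compute.
// Keying on both commit IDs means a push to either branch produces a new key.
func (c *divergenceCache) Get(repoID int64, branchCommit, baseCommit string, compute func() (Divergence, error)) (Divergence, error) {
	key := fmt.Sprintf("%d:%s:%s", repoID, branchCommit, baseCommit)
	c.mu.Lock()
	d, ok := c.items[key]
	c.mu.Unlock()
	if ok {
		return d, nil
	}
	d, err := compute() // the slow part, e.g. counting commits with git
	if err != nil {
		return Divergence{}, err
	}
	c.mu.Lock()
	c.items[key] = d
	c.mu.Unlock()
	return d, nil
}

func main() {
	c := newDivergenceCache()
	calls := 0
	slow := func() (Divergence, error) { calls++; return Divergence{Ahead: 3, Behind: 1}, nil }
	c.Get(1, "abc", "def", slow)
	c.Get(1, "abc", "def", slow)
	fmt.Println("compute calls:", calls)
}
```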

Refactor the webhook logic so that the type-dependent processing happens in only one place.

---

## Current webhook flow

1. An event happens
2. It is pre-processed (depending on the webhook type) and its body is added to a task queue
3. When the task is processed, some more logic (depending on the webhook type as well) is applied to make an HTTP request

This means that webhook-type-dependent logic is needed in steps 2 and 3. This is cumbersome and brittle to maintain.

Updated webhook flow with this PR:

1. An event happens
2. It is stored as-is and added to a task queue
3. When the task is processed, the event is processed (depending on the webhook type) to make an HTTP request

So the only webhook-type-dependent logic happens in one place (step 3), which should be much more robust.

## Consequences of the refactor

- the raw event must be stored in the hooktask (until now, the pre-processed body was stored)
- to ensure that previous hooktasks are correctly sent, a `payload_version` is added (version 1: the body has already been pre-processed / version 2: the body is the raw event)
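The delivery-time dispatch described above might look roughly like this. `HookTask`, `builders` and `newRequest` are hypothetical names. Only the meaning of the two payload versions comes from the text.

```go
package main

import (
	"bytes"
	"fmt"
	"net/http"
)

// HookTask is a hypothetical, trimmed-down hook task record.
type HookTask struct {
	PayloadVersion int    // 1: body already pre-processed, 2: raw event
	PayloadContent string // stored body
}

// requestBuilder turns a raw event into an HTTP request for one webhook type.
type requestBuilder func(url string, rawEvent []byte) (*http.Request, error)

// builders is the single place that holds webhook-type-dependent logic.
var builders = map[string]requestBuilder{
	"gitea": func(url string, raw []byte) (*http.Request, error) {
		// In this sketch the Gitea type sends the raw event JSON unchanged.
		req, err := http.NewRequest(http.MethodPost, url, bytes.NewReader(raw))
		if err != nil {
			return nil, err
		}
		req.Header.Set("Content-Type", "application/json")
		return req, nil
	},
}

// newRequest runs when the task is taken from the queue (step 3).
func newRequest(hookType, url string, t HookTask) (*http.Request, error) {
	switch t.PayloadVersion {
	case 1:
		// Legacy task: the body was prepared before queuing; send it as stored.
		return http.NewRequest(http.MethodPost, url, bytes.NewReader([]byte(t.PayloadContent)))
	case 2:
		build, ok := builders[hookType]
		if !ok {
			return nil, fmt.Errorf("unsupported webhook type %q", hookType)
		}
		return build(url, []byte(t.PayloadContent))
	default:
		return nil, fmt.Errorf("unknown payload version %d", t.PayloadVersion)
	}
}

func main() {
	req, err := newRequest("gitea", "http://localhost/hook", HookTask{PayloadVersion: 2, PayloadContent: `{"ref":"refs/heads/main"}`})
	fmt.Println(req.Method, req.URL, err)
}
```

With this shape, adding a webhook type means adding one entry to the map, which matches the reduced footprint described for custom webhooks.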

So future webhook additions will only have to deal with creating an `http.Request` based on the raw event (no need to adjust the code in multiple places, as is currently the case).

Moreover, since this processing happens when fetching from the task queue, it ensures that queuing new events (upon a `git push`, for instance) is not slowed down by a slow webhook.

As a concrete example, PR #19307 for custom webhooks should become substantially smaller:

- no need to change `services/webhook/deliver.go`
- minimal change in `services/webhook/webhook.go` (add the new webhook to the map)
- no need to change all the individual webhook files (since with this refactor the `*webhook_model.Webhook` is provided as an argument)

Consider executable files a valid case when returning a DownloadURL for them.

They are just regular files; the only difference is that the executable permission bit is set.
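Git records a file's type in the tree entry mode, which is why an executable file is just a regular blob with a different mode. The helper below is a hypothetical sketch. The octal values are Git's standard tree entry modes. Giving no download URL to symlinks, trees and submodules is an assumption of this example.

```go
package main

import "fmt"

// Git tree entry modes (octal).
const (
	modeBlob       = 0o100644 // regular file
	modeExecutable = 0o100755 // regular file with the executable bit set
	modeSymlink    = 0o120000
	modeTree       = 0o040000
	modeCommit     = 0o160000 // submodule (gitlink)
)

// hasDownloadURL is hypothetical: plain and executable blobs are both regular
// files, so both get a download URL.
func hasDownloadURL(mode int) bool {
	return mode == modeBlob || mode == modeExecutable
}

func main() {
	for _, m := range []int{modeBlob, modeExecutable, modeSymlink, modeTree, modeCommit} {
		fmt.Printf("%06o -> %v\n", m, hasDownloadURL(m))
	}
}
```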

Co-authored-by: Gusted <postmaster@gusted.xyz>

After repository commit statuses were introduced on the dashboard, the top SQL query comes from `GetLatestCommitStatusForPairs`.

This PR adds a cache for the latest combined commit status of the repository's default branch. When a new commit status is added, the cache is invalidated.

<img width="998" alt="image" src="https://github.com/go-gitea/gitea/assets/81045/76759de7-3a83-4d54-8571-278f5422aed3">

Unlike other asynchronous processing in the queue, we should sync branches to the DB immediately when handling git hook calls. If it fails, users can see the error message in the output of the git command.

This avoids potential inconsistency issues and helps #29494.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

This PR makes the code simpler, reduces unnecessary database queries, and fixes some warnings about `errors.New`.

---------

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Also resolves a warning for current releases:

```
| ##[group]GitHub Actions runtime token ACs
| ##[warning]Cannot parse GitHub Actions Runtime Token ACs: "undefined" is not valid JSON
| ##[endgroup]
====>
| ##[group]GitHub Actions runtime token ACs
| ##[endgroup]
```

\* this is an error in v3

References in the docker org:

- 831ca179d3/src/main.ts (L24)
- 7d8b4dc669/src/github.ts (L61)

No known official action of GitHub makes use of this claim.

Current releases throw an error when configured to use the actions cache:

```
| ERROR: failed to solve: failed to configure gha cache exporter: invalid token without access controls
| ##[error]buildx failed with: ERROR: failed to solve: failed to configure gha cache exporter: invalid token without access controls
```

Part of #23318

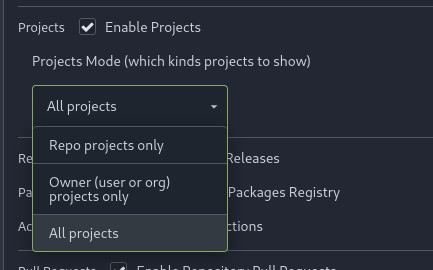

Add a menu in the repo settings that lets a repo admin decide not just whether projects are enabled or disabled per repo, but also which kinds of projects (repo-level/owner-level) are enabled. If repo projects are disabled, don't show the projects tab.

---------

Co-authored-by: delvh <dev.lh@web.de>

Fixes: https://github.com/go-gitea/gitea/issues/29498

I don't quite understand this code, but this change does seem to fix the issue, and I tested a number of diffs with it and saw no issue. The function gets values like these if the last line is an addition:

```
LastLeftIdx: (int) 0,
LastRightIdx: (int) 47,
LeftIdx: (int) 47,
RightIdx: (int) 48,
```

If it's a deletion, it gets:

```
LastLeftIdx: (int) 47,
LastRightIdx: (int) 0,
LeftIdx: (int) 48,
RightIdx: (int) 47,
```

So I think it's correct to make this check respect both the left and the right side.

1. Fix incorrect `HookEventType` for issue-related events in `IssueChangeAssignee`
2. Add `case "types"` to the `switch` block in `matchPullRequestEvent` to avoid warning logs

Since `modules/context` has to depend on `models` and many other packages, it should be moved from `modules/context` to `services/context` according to design principles. There is no logic code change in this PR; it only moves packages.

- Move `code.gitea.io/gitea/modules/context` to `code.gitea.io/gitea/services/context`
- Move `code.gitea.io/gitea/modules/contexttest` to `code.gitea.io/gitea/services/contexttest` because it depends on context
- Move `code.gitea.io/gitea/modules/upload` to `code.gitea.io/gitea/services/context/upload` because it depends on context

Some specific events on GitLab issues and merge requests are stored separately from comments as "resource state events". With this change, all relevant resource state events are downloaded during issue and merge request migration, and converted to comments.

This PR also updates the template used to render comments to add support for migrated comments of these types.

ref: https://docs.gitlab.com/ee/api/resource_state_events.html

Fixes #26691

Revert #24972

The Alpine package manager expects `noarch` packages to appear in the indexes of the other architectures too.
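Below is a sketch of the selection rule, using a hypothetical `Package` type. Each architecture's index contains the packages built for that architecture plus every `noarch` package.

```go
package main

import "fmt"

// Package is a hypothetical, minimal Alpine package record.
type Package struct {
	Name string
	Arch string
}

// packagesForIndex selects what goes into the APKINDEX of one architecture:
// packages built for it, plus all `noarch` packages.
func packagesForIndex(all []Package, arch string) []Package {
	var out []Package
	for _, p := range all {
		if p.Arch == arch || p.Arch == "noarch" {
			out = append(out, p)
		}
	}
	return out
}

func main() {
	all := []Package{{Name: "hello", Arch: "x86_64"}, {Name: "docs", Arch: "noarch"}, {Name: "hello", Arch: "aarch64"}}
	fmt.Println(packagesForIndex(all, "x86_64"))
}
```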

---------

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Fix #14459

The following users can add/remove review requests of a PR:

- the poster of the PR
- the owner or collaborators of the repository
- members with read permission on the pull requests unit

GitLab generates "system notes" whenever an event happens within the platform. Unlike Gitea, GitLab stores and retrieves those events as text comments with no semantic details. The only way to tell whether a comment was generated in this manner is the `system` flag on the note type.

This PR adds detection for a new specific kind of event: changing the target branch of a PR. When detected, the event is downloaded using Gitea's type for it and eventually uploaded into Gitea in the expected format, i.e. with no text content in the comment.

This PR also updates the template used to render comments to add support for migrated comments of this type.

ref:

11bd6dc826/app/services/system_notes/merge_requests_service.rb (L102)
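Detection of this note could look like the sketch below. The note text is assumed to have the form changed target branch from `main` to `dev`, with both branch names in backticks. That form is modelled on the referenced GitLab service and may differ between GitLab versions. `Note` and the function name are hypothetical.

```go
package main

import (
	"fmt"
	"regexp"
)

// Assumed note text; not verified against every GitLab version.
var targetBranchNote = regexp.MustCompile("^changed target branch from `(.+?)` to `(.+)`$")

// Note is a hypothetical subset of a GitLab note.
type Note struct {
	Body   string
	System bool
}

// parseTargetBranchChange returns the old and new branch for a system note that
// records a target-branch change; ok is false for any other note.
func parseTargetBranchChange(n Note) (oldBranch, newBranch string, ok bool) {
	if !n.System {
		return "", "", false
	}
	m := targetBranchNote.FindStringSubmatch(n.Body)
	if m == nil {
		return "", "", false
	}
	return m[1], m[2], true
}

func main() {
	oldB, newB, ok := parseTargetBranchChange(Note{Body: "changed target branch from `main` to `release`", System: true})
	fmt.Println(oldB, newB, ok)
}
```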

Now we can get the object format name either from the git command line or from the `repository` table in the database. Assuming the column is correct, we don't need to read it from the git command line every time.

This also fixes a possible bug where the object format is wrong when migrating a SHA256 repository from an external source.

<img width="658" alt="image" src="https://github.com/go-gitea/gitea/assets/81045/6e9a9dcf-13bf-4267-928b-6bf2c2560423">

### Overview

This is the implementation of the Code Frequency page. This feature was requested in these issues: #18262, #7392.

It adds another tab called Code Frequency to the Activity page. The Code Frequency tab shows additions and deletions over time since the repository was created.

Before:

<img width="1296" alt="image" src="https://github.com/go-gitea/gitea/assets/32161460/2603504f-aee7-4929-a8c4-fb3412a7a0f6">

After:

<img width="1296" alt="image" src="https://github.com/go-gitea/gitea/assets/32161460/58c03721-729f-4536-a663-9f337f240963">

---

#### Features

- See additions and deletions over time since the repository was created
- Click on the "Additions" or "Deletions" legend to show only one type of contribution
- Use the same cache as the Contributors page, so data loads quickly once it has been cached by visiting either page

---------

Co-authored-by: Giteabot <teabot@gitea.io>

Fix #29175

Replaces #29207

This PR makes some improvements to the `issue_comment` workflow trigger event.

1. Fix the bug that pull requests cannot trigger `issue_comment` workflows.
2. Previously, the `issue_comment` event only supported the `created` activity type. This PR adds support for the missing `edited` and `deleted` activity types.
3. Some events (including `issue_comment`, `issues`, etc.) only trigger workflows defined in the workflow files on the default branch. This PR introduces the `IsDefaultBranchWorkflow` function to check for these events.

GitLab generates "system notes" whenever an event happens within the platform. Unlike Gitea, GitLab stores and retrieves those events as text comments with no semantic details. The only way to tell whether a comment was generated in this manner is the `system` flag on the note type.

This PR adds detection for two specific kinds of events: scheduling and un-scheduling of automatic merges on a PR. When detected, they are downloaded using Gitea's types for these events and eventually uploaded into Gitea in the expected format, i.e. with no text content in the comment.

This PR also updates the template used to render comments to add support for migrated comments of these two types.

ref:

11bd6dc826/app/services/system_notes/merge_requests_service.rb (L6-L17)

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

https://github.com/go-gitea/gitea/pull/27172#discussion_r1493735466

When cleaning up artifacts, the old code removed the storage first. If the storage did not exist (for example, because it was deleted manually), the removal failed and the loop continued, leaving the record pending. With many pending but non-existent artifacts, this created an endless loop.

Now it updates the DB record first, so artifacts no longer pile up in the pending status.

Fix #29166

Add support for the following activity types of `pull_request`:

- assigned
- unassigned
- review_requested
- review_request_removed
- milestoned
- demilestoned

There is a missing newline when generating the Debian apt repo InRelease file, which results in output like:

```
[...]
Date: Wed, 14 Feb 2024 05:03:01 UTC
Acquire-By-Hash: yesMD5Sum:
51a518dbddcd569ac3e0cebf330c800a 3018 main-dev/binary-amd64/Packages
[...]
```

This would probably cause apt to ignore the Acquire-By-Hash setting and not use the by-hash functionality, although I'm not sure how to confirm it.

The old code was not consistent in how it generated and decoded JWT secrets. Now, callers only need to use 2 consistent functions: `NewJwtSecretWithBase64` and `DecodeJwtSecretBase64`.

This PR also removes the uncommon function `Base64FixedDecode` from `util.go`.
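Below is a minimal sketch of a consistent generate/decode pair. The lowercase names are hypothetical stand-ins for `NewJwtSecretWithBase64` and `DecodeJwtSecretBase64`. The 32-byte length and the unpadded URL-safe base64 alphabet are assumptions.

```go
package main

import (
	"crypto/rand"
	"encoding/base64"
	"fmt"
)

// secretLen is an assumed secret size in bytes.
const secretLen = 32

// newJwtSecretWithBase64 generates a random secret and its encoded form.
func newJwtSecretWithBase64() ([]byte, string, error) {
	b := make([]byte, secretLen)
	if _, err := rand.Read(b); err != nil {
		return nil, "", err
	}
	return b, base64.RawURLEncoding.EncodeToString(b), nil
}

// decodeJwtSecretBase64 is the exact inverse and rejects secrets of the wrong size.
func decodeJwtSecretBase64(s string) ([]byte, error) {
	b, err := base64.RawURLEncoding.DecodeString(s)
	if err != nil {
		return nil, err
	}
	if len(b) != secretLen {
		return nil, fmt.Errorf("invalid secret length %d, want %d", len(b), secretLen)
	}
	return b, nil
}

func main() {
	_, encoded, err := newJwtSecretWithBase64()
	if err != nil {
		panic(err)
	}
	decoded, err := decodeJwtSecretBase64(encoded)
	fmt.Println(len(encoded), len(decoded), err)
}
```

Because both directions share one encoding and one length check, a secret written by one function is always accepted by the other.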

Continuation of https://github.com/go-gitea/gitea/pull/25439. Fixes #847

Before:

<img width="1296" alt="image" src="https://github.com/go-gitea/gitea/assets/32161460/24571ac8-b254-43c9-b178-97340f0dc8a9">

----

After:

<img width="1296" alt="image" src="https://github.com/go-gitea/gitea/assets/32161460/c60b2459-9d10-4d42-8d83-d5ef0f45bf94">

---

#### Overview

This is the implementation of a requested feature: the Contributors graph (#847).

It makes the Activity page a multi-tab page and adds a new tab called Contributors. The Contributors tab shows contribution graphs over time since the repository was created. It also shows per-user contribution graphs for the top 100 contributors. The top 100 are determined by the selected contribution type (commits, additions or deletions).

---

#### Demo

(The demo is a bit old but still a good example to show off the main features.)

<video src="https://github.com/go-gitea/gitea/assets/32161460/9f68103f-8145-4cc2-94bc-5546daae7014" controls width="320" height="240">
<a href="https://github.com/go-gitea/gitea/assets/32161460/9f68103f-8145-4cc2-94bc-5546daae7014">Download</a>
</video>

#### Features:

- Select the contribution type (commits, additions or deletions)
- See overall and per-user contribution graphs for the selected contribution type
- Zoom and pan on graphs to see them in detail
- See the top 100 contributors based on the selected contribution type and time range
- Go directly to a user's profile by clicking their name if they are a registered Gitea user
- Cache the results so that data is fetched faster when the same repository is visited again

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: hiifong <i@hiif.ong>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: yp05327 <576951401@qq.com>

Clarify when `string` should be used (and be escaped), and when `template.HTML` should be used (no need to escape).

This also helps PRs like #29059 render error messages correctly.

With this option, it is possible to require a linear commit history with the following benefits over the next best option, `Rebase+fast-forward`: the original commits continue to exist, with their original signatures staying valid instead of being rewritten; there is no merge commit; and reverting commits becomes easier.

Closes #24906

The old code `GetTemplatesFromDefaultBranch(...) ([]*api.IssueTemplate, map[string]error)` doesn't really follow Go conventions, so the second returned value might be misused. For example, the API function `GetIssueTemplates` incorrectly checked the second returned value and always responded with a 500 error.

This PR renames `GetTemplatesFromDefaultBranch` to `ParseTemplatesFromDefaultBranch`, clarifies its behavior, fixes the API endpoint bug, and adds some tests.
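Below is a sketch of the clearer shape. It assumes, without confirmation from the text, that the refactored function returns the valid templates and the per-file problems as named fields of a result value, instead of a bare `map[string]error` second return. Types and names are hypothetical.

```go
package main

import (
	"errors"
	"fmt"
)

// IssueTemplate is a hypothetical, minimal template type.
type IssueTemplate struct{ Name string }

// ParseResult bundles valid templates with per-file problems, so the per-file
// errors read as data rather than as a failed call.
type ParseResult struct {
	IssueTemplates []*IssueTemplate
	TemplateErrors map[string]error
}

// parseTemplates keeps parsing after a broken file and records why it failed.
func parseTemplates(files map[string]string) ParseResult {
	res := ParseResult{TemplateErrors: map[string]error{}}
	for name, content := range files {
		if content == "" {
			res.TemplateErrors[name] = errors.New("empty template")
			continue
		}
		res.IssueTemplates = append(res.IssueTemplates, &IssueTemplate{Name: name})
	}
	return res
}

func main() {
	res := parseTemplates(map[string]string{"bug.md": "name: Bug", "broken.md": ""})
	// Treating any per-file error as fatal would answer 500 whenever one template
	// is broken; instead, serve the valid templates and report the broken ones.
	fmt.Println(len(res.IssueTemplates), "valid,", len(res.TemplateErrors), "broken")
}
```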

Also, add the proper `X-` prefix to the header generated in `checkDeprecatedAuthMethods`, because non-standard HTTP headers should have an `X-` prefix; this is also consistent with the new code in `GetIssueTemplates`.

Outgoing new-release e-mail notifications were missing links to the actual release. An example from a Codeberg.org e-mail:

`<a href=3D"">View it on Codeberg.org</a>.<br/>`

This PR adds a `"Link"` context property pointing to the release on the web interface.

The change was tested using `[mailer] PROTOCOL=dummy`.

Signed-off-by: Wiktor Kwapisiewicz <wiktor@metacode.biz>

Resolves https://github.com/go-gitea/gitea/issues/28704

Example of an entry in the generated `APKINDEX` file:

```
C:Q1xCO3H9LTTEbhKt9G1alSC87I56c=
P:hello
V:2.12-r1
A:x86_64
T:The GNU Hello program produces a familiar, friendly greeting
U:https://www.gnu.org/software/hello/
L:GPL-3.0-or-later
S:15403
I:36864
o:hello
m:
t:1705934118
D:so:libc.musl-x86_64.so.1
p:cmd:hello=2.12-r1
i:foobar=1.0 !baz
k:42
```

The `i:` and `k:` entries are new.

---------

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Fixes #28660

Fixes an admin API bug related to `user.LoginSource`

Fixes the `/user/emails` response not being identical to the GitHub API

This PR unifies the user update methods. The goal is to keep the logic in only one place (with audit logs in mind). For example, the password checks are done in a single method instead of everywhere a password is updated.

After this PR is merged, user creation should be next.

Emails from Gitea comments do not contain the username of the commenter anywhere, only their display name, so it is not possible to verify from the email itself who made a comment:

From: "Alice" <email@gitea>

X-Gitea-Sender: Alice

X-Gitea-Recipient: Bob

X-GitHub-Sender: Alice

X-GitHub-Recipient: Bob

This comment looks like it's from @alice.

The X-Gitea/X-GitHub headers also use display names, which are not very reliable for filtering and are inconsistent with GitHub's behavior:

X-GitHub-Sender: lunny

X-GitHub-Recipient: gwymor

This change includes both the display name and the username in the From header, and switches the other headers from display name to username:

From: "Alice (@fakealice)" <email@gitea>

X-Gitea-Sender: fakealice

X-Gitea-Recipient: bob

X-GitHub-Sender: fakealice

X-GitHub-Recipient: bob

This comment looks like it's from @alice.
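The sketch below builds the new From header with Go's `net/mail` package. `fromHeader` and `senderHeaders` are hypothetical helpers, and the `Name (@username)` layout follows the example above. `mail.Address` quotes the display name, and would MIME-encode it if it contained non-ASCII characters.

```go
package main

import (
	"fmt"
	"net/mail"
)

// fromHeader builds the From value with both the display name and the username.
func fromHeader(displayName, username, address string) string {
	a := mail.Address{Name: fmt.Sprintf("%s (@%s)", displayName, username), Address: address}
	return a.String()
}

// senderHeaders uses the stable username instead of the display name.
func senderHeaders(sender, recipient string) map[string]string {
	return map[string]string{
		"X-Gitea-Sender":     sender,
		"X-Gitea-Recipient":  recipient,
		"X-GitHub-Sender":    sender,
		"X-GitHub-Recipient": recipient,
	}
}

func main() {
	fmt.Println("From: " + fromHeader("Alice", "fakealice", "email@gitea"))
	for k, v := range senderHeaders("fakealice", "bob") {
		fmt.Printf("%s: %s\n", k, v)
	}
}
```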

This change allows act_runner / actions_runner to use JWT tokens for `ACTIONS_RUNTIME_TOKEN` that are compatible with actions/upload-artifact@v4.

The official artifact actions now validate the JWT and extract its `scp` claim to get the run ID and job ID, so the old artifact backend also needs to accept the same JWT.
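Below is a sketch of reading the run and job IDs from the `scp` claim. The claim layout is an assumption and should be verified against the artifact toolkit: space-separated scopes, one of which is `Actions.Results:<runID>:<jobID>`. The function name is hypothetical.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// parseResultsScope finds the Actions.Results scope and returns its run and job IDs.
func parseResultsScope(scp string) (runID, jobID string, err error) {
	for _, scope := range strings.Fields(scp) {
		parts := strings.Split(scope, ":")
		if parts[0] != "Actions.Results" {
			continue
		}
		if len(parts) != 3 {
			return "", "", fmt.Errorf("malformed scope %q", scope)
		}
		return parts[1], parts[2], nil
	}
	return "", "", errors.New("no Actions.Results scope in scp claim")
}

func main() {
	scp := fmt.Sprintf("Actions.Results:%d:%d", 12, 34) // how an issuer might build it
	run, job, err := parseResultsScope(scp)
	fmt.Println(run, job, err)
}
```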

---

Related to #28853

I'm not familiar with the auth system; maybe you know how to improve this.

I have tested that:

- the JWT is a valid token for artifact uploading
- the JWT can be parsed by actions/upload-artifact@v4 and passes its `scp` claim validation

Next steps would be a new artifacts@v4 backend.

~~I'm linking the act_runner change soonish.~~

The act_runner change that makes this effective and uses JWT tokens: <https://gitea.com/gitea/act_runner/pulls/471>

In #28691, schedule plans are deleted when a repo's actions unit is disabled. But when the unit is re-enabled, the schedule plans are not created again.

This PR fixes the bug: the schedule plans are created again when the actions unit is re-enabled.

## Purpose

This is a refactor toward building an abstraction over managing git repositories.

Afterwards, it will no longer matter whether they are stored on the local disk or somewhere remote.

## What this PR changes

Previously, we used `git.OpenRepository` everywhere. Now we split it into two distinct functions.

First, there are temporary repositories, which do not change:

```go
git.OpenRepository(ctx, diskPath)
```

Gitea-managed repositories, which have a record in the `repository` database table, are moved into the new package `gitrepo`:

```go
gitrepo.OpenRepository(ctx, repo) // repo is a repo_model.Repository
```

Why is `repo_model.Repository` the second parameter instead of a file path? Because then we can easily adapt our repository storage strategy. The repositories can be stored locally; however, they could just as well be stored on a remote server.

## Further changes in other PRs

- A Git command wrapper could be created in package `gitrepo`, i.e. `NewCommand(ctx, repo_model.Repository, commands...)`. Callers would then leave the directory in `git.RunOpts{Dir: repo.RepoPath()}` empty before invoking this method, and only the function itself would fill it in. #28940
- Remove the `RepoPath()`/`WikiPath()` functions to reduce the possibility of mistakes.

---------

Co-authored-by: delvh <dev.lh@web.de>

Fixes #28699

This PR implements the `MigrateRepository` method for `actionsNotifier` to detect the schedules from the workflow files in the migrated repository.

The method can't be called within an outer transaction: if the user is not a collaborator, the outer transaction will be rolled back even though the inner transaction takes the no-error path.

`has == 0` leads to `return nil`, which cancels the transaction. A standalone call of this method does nothing, but if it is used within an outer transaction, that transaction will be canceled.

Sometimes you need to work on a feature that depends on another (unmerged) feature.

In this case, you may create a PR based on that feature instead of the main branch.

Currently, such PRs are closed, with no possibility to reopen them, once the parent feature is merged and its branch is deleted.

Automatically changing the target branch makes life a lot easier in such cases.

GitHub and Bitbucket behave this way.

Example:

$PR_1$: main <- feature1

$PR_2$: feature1 <- feature2

Currently, merging $PR_1$ and deleting its branch leads to $PR_2$ being closed without the possibility to reopen.

This is both annoying and loses the review history when you open a new PR.

With this change, $PR_2$ will change its target branch to main ($PR_2$: main <- feature2) after $PR_1$ has been merged and its branch has been deleted.

This behavior is enabled by default but can be disabled.

For security reasons, this target branch change will not be executed when merging PRs targeting another repo.
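The retargeting rule can be sketched as follows. `PullRequest` and `retargetAfterMerge` are hypothetical. The sketch performs no retargeting at all when the merged PR is cross-repository, which is one reading of the security restriction described above.

```go
package main

import "fmt"

// PullRequest is a hypothetical, minimal PR record.
type PullRequest struct {
	ID         int64
	BaseRepoID int64
	HeadRepoID int64
	BaseBranch string
	HeadBranch string
	Closed     bool
}

// retargetAfterMerge runs after `merged` was merged and its head branch deleted.
// Open PRs in the same repository that targeted the deleted branch are moved to
// the merged PR's base branch. It returns the IDs of the retargeted PRs.
func retargetAfterMerge(merged PullRequest, open []*PullRequest) []int64 {
	if merged.BaseRepoID != merged.HeadRepoID {
		return nil // cross-repository merge: never retarget
	}
	var changed []int64
	for _, pr := range open {
		if pr.Closed || pr.ID == merged.ID {
			continue
		}
		if pr.BaseRepoID == merged.HeadRepoID && pr.BaseBranch == merged.HeadBranch {
			pr.BaseBranch = merged.BaseBranch
			changed = append(changed, pr.ID)
		}
	}
	return changed
}

func main() {
	pr1 := PullRequest{ID: 1, BaseRepoID: 10, HeadRepoID: 10, BaseBranch: "main", HeadBranch: "feature1"}
	pr2 := &PullRequest{ID: 2, BaseRepoID: 10, HeadRepoID: 10, BaseBranch: "feature1", HeadBranch: "feature2"}
	fmt.Println(retargetAfterMerge(pr1, []*PullRequest{pr2}), pr2.BaseBranch)
}
```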

Fixes #27062, Fixes #18408

---------

Co-authored-by: Denys Konovalov <kontakt@denyskon.de>

Co-authored-by: delvh <dev.lh@web.de>

Fixes #22236

---

The following error currently occurs when trying to revert a commit using the `read-tree -m` approach:

> 2022/12/26 16:04:43 ...rvices/pull/patch.go:240:AttemptThreeWayMerge() [E] [63a9c61a] Unable to run read-tree -m! Error: exit status 128 - fatal: this operation must be run in a work tree
> - fatal: this operation must be run in a work tree

We need to clone a non-bare repository for `git read-tree -m` to work.

bb371aee6e adds support for creating a non-bare cloned temporary upload repository.

After cloning a non-bare temporary upload repository, we [set the default index](https://github.com/go-gitea/gitea/blob/main/services/repository/files/cherry_pick.go#L37) (`git read-tree HEAD`).

This operation ends up resetting the git index file (see investigation details below), so we need to call `git update-index --refresh` afterward.

Here's the diff of the index file before and after we execute SetDefaultIndex: https://www.diffchecker.com/hyOP3eJy/

Notice that **ctime** and **mtime** are set to 0 after SetDefaultIndex.

You can reproduce the same behavior using these steps:

```bash
$ git clone https://try.gitea.io/me-heer/test.git -s -b main
$ cd test
$ git read-tree HEAD
$ git read-tree -m 1f085d7ed8 1f085d7ed8 9933caed00
error: Entry '1' not uptodate. Cannot merge.
```

After that, we can fix it like this:

```bash
$ git update-index --refresh
$ git read-tree -m 1f085d7ed8 1f085d7ed8 9933caed00
```

As more and more options can be set when creating a repository, I don't think we should put all of them on the creation web page, which would make it look complicated and confusing.

I also think we need some rules for deciding what should or should not be on the repository creation page. One rule I can imagine: if an option can be changed later and is not required at creation time, it can be removed from the page. The trust model is the first such option I found.

This PR removes the trust model selection from the repository creation web page and keeps the other options as before.

This is also a preparation for #23894, which will add a choice between SHA1 and SHA256 that cannot be changed once the repository is created.

Fixes #26548

This PR refactors the rendering of markup links. The old code uses `strings.Replace` to change some URLs, while the new code uses more context to decide which link should be generated.

The added tests should ensure the same output for the old and new behaviour (apart from the bug).

We may need to refactor the rendering a bit more to make it clear how the different helper methods render the input string. There are lots of options (resolve links / images / mentions / git hashes / emojis / ...), but you don't really know which helper uses which options. For example, we currently support images in the user description, which I think should not be allowed:

<details>

<summary>Profile</summary>

https://try.gitea.io/KN4CK3R

</details>

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Fixes #27114.

* In Gitea 1.12 (#9532), a "dismiss stale approvals" branch protection setting was introduced for ignoring stale reviews when verifying the approval count of a pull request.
* In Gitea 1.14 (#12674), the "dismiss review" feature was added.
* This caused confusion among users (#25858), as "dismiss" now means 2 different things.
* In Gitea 1.20 (#25882), the behavior of the "dismiss stale approvals" branch protection was changed to actually dismiss the stale review.

For some users, this new behavior of dismissing stale reviews is not desirable.

So this PR reintroduces the old behavior as a new "ignore stale approvals" branch protection setting.

---------

Co-authored-by: delvh <dev.lh@web.de>

Fix #28157

This PR fixes possible bugs in actions schedules.

## The Changes

- Move `UpdateRepositoryUnit` and `SetRepoDefaultBranch` from the models to the service layer
- Remove schedule plans from the database and cancel waiting & running schedule tasks in this repository when the actions unit is disabled, either for the repository or globally.
- Remove schedule plans from the database and cancel waiting & running schedule tasks in this repository when the default branch is changed.

- If there was an error with the Git command in `checkIfPRContentChanged`, the stderr wasn't concatenated to the error, so the cause of the error remained unknown.
- Adds concatenation of stderr to the returned error.
- Ref: https://codeberg.org/forgejo/forgejo/issues/2077

Co-authored-by: Gusted <postmaster@gusted.xyz>

I noticed that the `BuildAllRepositoryFiles` function under the Alpine folder is unused, and I thought it was a bug.

But I'm not sure about this. Was it on purpose?

#28361 introduced `syncBranchToDB` in `CreateNewBranchFromCommit`. This PR reverts that change because it's unnecessary: every push is already checked by `syncBranchToDB`.

This PR also adds a test to ensure this is correct.

Introduce the new generic deletion methods:

- `func DeleteByID[T any](ctx context.Context, id int64) (int64, error)`
- `func DeleteByIDs[T any](ctx context.Context, ids ...int64) error`
- `func Delete[T any](ctx context.Context, opts FindOptions) (int64, error)`

So we no longer need any type-specific deletion methods and can just use the generic ones instead.

Replacement of #28450. Closes #28450

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Using the official Go tool `golang.org/x/tools/cmd/deadcode@latest`, mentioned on the [Go blog](https://go.dev/blog/deadcode).

Just run `deadcode .` in the project root folder and it gives a list of unused functions, though it has some false alarms.

This PR removes dead code detected in `models/issues`.

Nowadays, the cache is used almost everywhere in Gitea and cannot be disabled without making some features unavailable.

So I think we can simply remove the option to enable the cache, which means the cache can no longer be disabled.

But of course, we can still use the cache configuration to set how Gitea should use the cache.

These 4 functions are duplicated, especially as interface methods. I think we only need to keep `MustID` and can remove the other 3.

```
MustID(b []byte) ObjectID
MustIDFromString(s string) ObjectID
NewID(b []byte) (ObjectID, error)
NewIDFromString(s string) (ObjectID, error)
```

Introduced the new interface method `ComputeHash`, which will replace the interface `HasherInterface`. Now we don't need to keep two interfaces.

Reintroduced `git.NewIDFromString` and `git.MustIDFromString`. The new functions detect the hash length to decide the object format: a length of 40 means SHA1, and a length of 64 means SHA256. This is only correct if the commit ID is a full one, so the parameter should always be a full commit ID.
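Below is a sketch of the length-based detection. It uses hypothetical minimal types rather than the real `git` package. 40 hex characters select SHA1 and 64 select SHA256. Anything else, such as an abbreviated ID, is rejected.

```go
package main

import (
	"encoding/hex"
	"fmt"
)

// ObjectFormat names the hash algorithm (hypothetical minimal type).
type ObjectFormat string

const (
	SHA1   ObjectFormat = "sha1"
	SHA256 ObjectFormat = "sha256"
)

// ObjectID is a decoded object ID plus its format.
type ObjectID struct {
	Format ObjectFormat
	Raw    []byte
}

// NewIDFromString picks the format from the length of a full hex ID.
func NewIDFromString(s string) (ObjectID, error) {
	var f ObjectFormat
	switch len(s) {
	case 40:
		f = SHA1
	case 64:
		f = SHA256
	default:
		return ObjectID{}, fmt.Errorf("%q is not a full object ID (length %d)", s, len(s))
	}
	raw, err := hex.DecodeString(s)
	if err != nil {
		return ObjectID{}, err
	}
	return ObjectID{Format: f, Raw: raw}, nil
}

// MustIDFromString panics on invalid input; use it only for IDs known to be valid.
func MustIDFromString(s string) ObjectID {
	id, err := NewIDFromString(s)
	if err != nil {
		panic(err)
	}
	return id
}

func main() {
	id := MustIDFromString("da39a3ee5e6b4b0d3255bfef95601890afd80709")
	fmt.Println(id.Format, len(id.Raw))
	_, err := NewIDFromString("1f085d7ed8") // abbreviated: rejected
	fmt.Println(err)
}
```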

@AdamMajer Please review.

Update golang.org/x/crypto for CVE-2023-48795 and update other packages. `go-git` is not updated because it needs time to figure out why some tests fail.

- Remove `ObjectFormatID`
- Remove function `ObjectFormatFromID`.
- Use `Sha1ObjectFormat` directly rather than a pointer, because it's an empty struct.
- Store `ObjectFormatName` in the `repository` struct

Refactor the hash interfaces and centralize the hash function. This will make it easier to introduce different hash functions later on.

This forms the "no-op" part of the SHA256 enablement patch.

## Changes

- Add a deprecation warning to the `Token` and `AccessToken` authentication methods in swagger.
- Add a deprecation warning header to API responses. Example:

  ```
  HTTP/1.1 200 OK
  ...
  Warning: token and access_token API authentication is deprecated
  ...
  ```

- Add the setting `DISABLE_QUERY_AUTH_TOKEN` to reject query string auth tokens entirely. Default is `false`.

## Next steps

- `DISABLE_QUERY_AUTH_TOKEN` should be true in a subsequent release, and the methods should be removed in swagger
- `DISABLE_QUERY_AUTH_TOKEN` should be removed, and the implementation of the auth methods in question should be removed

## Open questions

- Should there be further changes to the swagger documentation? Deprecation is not yet supported for security definitions (coming in [OpenAPI Spec version 3.2.0](https://github.com/OAI/OpenAPI-Specification/issues/2506))
- Should the API router logger sanitize URLs that use `token` or `access_token`? (This is obviously an insufficient solution on its own)

---------

Co-authored-by: delvh <dev.lh@web.de>

- Currently, there's code to recover gracefully from panics that happen while cron tasks execute. However, this recover code wasn't being run, because `RunWithShutdownContext` also contains code to recover from any panic and then gracefully shut down Forgejo. Because `RunWithShutdownContext` registers that code last, it runs first, which in this case is not the behavior we want.
- Move the recover code inside the function, so that it runs before `RunWithShutdownContext`'s recover code (which is now a no-op).
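Below is a minimal sketch of why the placement matters. Both functions are hypothetical stand-ins. A `recover` deferred inside the task stops the panic while that task's frame unwinds, so the outer recover that triggers a shutdown never sees it.

```go
package main

import (
	"fmt"
	"log"
)

// runWithShutdownContext is a hypothetical outer runner whose own recover
// treats any panic as fatal and requests a shutdown.
func runWithShutdownContext(fn func()) (shutdown bool) {
	defer func() {
		if r := recover(); r != nil {
			shutdown = true
		}
	}()
	fn()
	return false
}

// cronTask wraps a task body with its own recover, deferred inside the task.
func cronTask(name string, body func()) func() {
	return func() {
		defer func() {
			if r := recover(); r != nil {
				log.Printf("cron task %s panicked: %v", name, r)
			}
		}()
		body()
	}
}

func main() {
	shutdown := runWithShutdownContext(cronTask("cleanup", func() { panic("boom") }))
	fmt.Println("shutdown:", shutdown)
}
```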

Fixes: https://codeberg.org/forgejo/forgejo/issues/1910

Co-authored-by: Gusted <postmaster@gusted.xyz>

Fix #28056

This PR checks whether the repository has zero branches when a branch is pushed. If so, it means the repository's branches haven't been synced.

This happens when a user upgrades from v1.20 to v1.21 and then just pushes branches without visiting the repository's web UI: all repository routes check whether a branch sync is necessary, but push has no such check.

Every repository is in one of two states: synced or not synced. If a repository has zero branches, it is assumed to be not synced; otherwise, it is synced. So if we think it's synced, we just need to update the branch or insert a new one; otherwise, we do a full sync. As a result, almost no extra load is added to each push, and it performs better than the alternative approach.

For the implementation, we first try to update the branch. If the update succeeds with more than 0 affected records, we are done, because that means the branch is already in the database. If no record is affected, the branch does not exist in the database, and there are two possibilities: either this is a new branch, and we just need to insert the record, or the branches haven't been synced, and we need to sync all branches into the database.
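The update-first strategy described above can be sketched with an in-memory stand-in for the branch table. `branchStore`, `updateBranch`, and `syncOnPush` are hypothetical names for illustration; the real code issues SQL against the database and reads branches from git. The point is that the common case (an already recorded branch) costs a single update.

```go
package main

import "fmt"

// branchStore is a hypothetical in-memory stand-in for the branch table.
type branchStore struct {
	branches map[string]string // branch name -> commit ID
}

// updateBranch returns the number of affected records, like an SQL UPDATE.
func (s *branchStore) updateBranch(name, commit string) int {
	if _, ok := s.branches[name]; !ok {
		return 0
	}
	s.branches[name] = commit
	return 1
}

// syncOnPush applies the update-first strategy. gitBranches is assumed to list
// every branch currently in the git repository, including the pushed one.
func syncOnPush(s *branchStore, name, commit string, gitBranches map[string]string) string {
	if s.updateBranch(name, commit) > 0 {
		return "updated" // branch already recorded: nothing else to do
	}
	if len(s.branches) == 0 {
		// Zero recorded branches: the repository was never synced, so do a full sync.
		for n, c := range gitBranches {
			s.branches[n] = c
		}
		return "full sync"
	}
	// Synced repository and an unknown branch: it is genuinely new.
	s.branches[name] = commit
	return "inserted"
}

func main() {
	s := &branchStore{branches: map[string]string{}}
	git := map[string]string{"main": "a1", "dev": "b2"}
	fmt.Println(syncOnPush(s, "dev", "b2", git))
	fmt.Println(syncOnPush(s, "dev", "b3", git))
	fmt.Println(syncOnPush(s, "feature", "c1", git))
}
```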

The function `GetByBean` has an obvious defect: fields with empty (zero) values are ignored when building the query. Users may then get a wrong result, which could potentially lead to a security problem.

To avoid this possibility, this PR removes the function `GetByBean` and all references to it.

Some new generic functions have been introduced to be used instead. The recommended usage is as follows:

```go
// query an object by its ID
obj, err := db.GetByID[Object](ctx, id)

// query with other conditions (builder is xorm.io/builder)
obj, err = db.Get[Object](ctx, builder.Eq{"a": a, "b": b})
```
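Why ignoring zero values is dangerous can be shown with a minimal sketch, assuming a bean-style lookup that turns only non-zero struct fields into conditions (this mimics the behavior described above; it is not xorm's actual code). `User`, `conditionsFromBean`, and `firstMatch` are hypothetical. A lookup with an empty `Name` produces no condition at all, so it silently matches the first row instead of nothing.

```go
package main

import (
	"fmt"
	"reflect"
)

// User is a hypothetical table row.
type User struct {
	ID   int64
	Name string
}

// conditionsFromBean mimics bean-based querying: only non-zero fields
// become WHERE conditions; zero-valued fields are silently dropped.
func conditionsFromBean(bean any) map[string]any {
	conds := map[string]any{}
	v := reflect.ValueOf(bean)
	t := v.Type()
	for i := 0; i < v.NumField(); i++ {
		if f := v.Field(i); !f.IsZero() {
			conds[t.Field(i).Name] = f.Interface()
		}
	}
	return conds
}

// firstMatch returns the first row satisfying every condition.
func firstMatch(rows []User, conds map[string]any) (User, bool) {
	for _, row := range rows {
		rv := reflect.ValueOf(row)
		matched := true
		for field, want := range conds {
			if rv.FieldByName(field).Interface() != want {
				matched = false
				break
			}
		}
		if matched {
			return row, true
		}
	}
	return User{}, false
}

func main() {
	rows := []User{{ID: 1, Name: "admin"}, {ID: 2, Name: "alice"}}
	// An empty name yields no condition, so the lookup returns the first row.
	u, found := firstMatch(rows, conditionsFromBean(User{Name: ""}))
	fmt.Println(found, u.Name)
}
```

An explicit condition such as `builder.Eq{"name": name}` keeps the empty string in the query, which is what the generic helpers above rely on.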

- Push commit updates are run in a queue, and updates can come from less traceable places such as Git over SSH; therefore, add more information about which repository the pushUpdate failed on.

Refs: https://codeberg.org/forgejo/forgejo/pulls/1723

(cherry picked from commit 37ab9460394800678d2208fed718e719d7a5d96f)

Co-authored-by: Gusted <postmaster@gusted.xyz>

- Tell the binding middleware which locale should be used for the "required" error.

- Resolves https://codeberg.org/forgejo/forgejo/issues/1683

(cherry picked from commit 5a2d7966127b5639332038e9925d858ab54fc360)

Co-authored-by: Gusted <postmaster@gusted.xyz>

Changed the behavior to calculate the package quota limit using the package `creator ID` instead of the `owner ID`.

Currently, users are allowed to create an unlimited number of organizations, each of which has its own package quota, which gives users the ability to have unlimited package space across different organization scopes. This fix calculates the package quota based on the `package version creator ID` instead of the `package version owner ID` (which might be an organization), so that users cannot take more space than the configured package settings allow.

There is also an edge case in which users can publish packages to a specific package version initially published by a different user, consuming that user's package size quota. The fixed version should behave better, because the total amount of space is limited to the quota of the users sharing the same organization scope.
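The difference between owner-based and creator-based accounting can be sketched as follows. `PackageVersion`, the helpers, and the byte sizes are hypothetical; the sketch only shows that one user publishing into two organizations stays under a per-owner limit in each, while the per-creator total exposes the real usage.

```go
package main

import "fmt"

// PackageVersion is a hypothetical, simplified package version record.
type PackageVersion struct {
	OwnerID   int64 // user or organization that owns the package
	CreatorID int64 // user who published this version
	Size      int64 // total size of the version's files in bytes
}

// usedByOwner sums sizes per owner (the old accounting).
func usedByOwner(versions []PackageVersion, ownerID int64) (total int64) {
	for _, v := range versions {
		if v.OwnerID == ownerID {
			total += v.Size
		}
	}
	return total
}

// usedByCreator sums sizes per publishing user across all owners (the new accounting).
func usedByCreator(versions []PackageVersion, creatorID int64) (total int64) {
	for _, v := range versions {
		if v.CreatorID == creatorID {
			total += v.Size
		}
	}
	return total
}

// canUpload checks the creator's total usage plus the new upload against the limit.
func canUpload(versions []PackageVersion, creatorID, uploadSize, limit int64) bool {
	return usedByCreator(versions, creatorID)+uploadSize <= limit
}

func main() {
	// User 1 publishes 60 bytes into organization 10 and 60 bytes into organization 11.
	vs := []PackageVersion{
		{OwnerID: 10, CreatorID: 1, Size: 60},
		{OwnerID: 11, CreatorID: 1, Size: 60},
	}
	fmt.Println(usedByOwner(vs, 10), usedByOwner(vs, 11), usedByCreator(vs, 1))
	fmt.Println(canUpload(vs, 1, 1, 100))
}
```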

Fixes https://codeberg.org/forgejo/forgejo/issues/1458

Some mails, such as issue creation mails, are missing the reply-to-comment address. This PR fixes that and specifies which comment types should get a reply possibility.

Fixes #27819

We support two-factor logins both with the normal web login and with basic auth. For basic auth, the two-factor check was implemented in three different places, and you needed to know that the check was necessary. This PR moves the check into basic auth itself.

- On user deletion, delete the action runners that the user has created.

- Add a database consistency check to remove action runners whose owner no longer exists.

- Resolves https://codeberg.org/forgejo/forgejo/issues/1720

(cherry picked from commit 009ca7223dab054f7f760b7ccae69e745eebfabb)

Co-authored-by: Gusted <postmaster@gusted.xyz>

Steps to reproduce:

1. Create a new OAuth2 source.

2. Log in as a user with this OAuth2 source.

3. Disable the OAuth2 source.

4. Visit users -> settings -> security; a 500 error is displayed.

This happens because the page only loads active OAuth2 sources, not all OAuth2 sources.

After many refactoring PRs for the "locale" and "template context function", `.locale` is no longer needed for web templates.

This PR cleans up the following:

1. Remove `ctx.Data["locale"]` from the web context.

2. Use `ctx.Locale` in `500.tmpl`, for consistency.

3. Add a test check for locale usage on the `500 page`.

4. Remove `Str2html` and `DotEscape` from the mail template context data; they are copy&paste errors introduced by #19169 and #16200. These functions are template functions (provided by the common renderer), not template data variables.

5. Make the email `SendAsync` function mockable (I was planning to add more tests, but that would make this PR much too complex, so the tests could be done in another PR).
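One common way to make a package-level function mockable in Go is to expose it through a variable that tests can reassign. The sketch below assumes that pattern; apart from the name `SendAsync`, everything (`Message`, `sendAsync`, `notify`) is hypothetical and not the mailer's real code.

```go
package main

import "fmt"

// Message is a hypothetical, minimal mail message.
type Message struct{ To, Subject string }

// sendAsync would enqueue messages for delivery; here it only prints.
func sendAsync(msgs ...*Message) {
	for _, m := range msgs {
		fmt.Println("queued mail to", m.To)
	}
}

// SendAsync is a variable, so tests can swap in a fake implementation.
var SendAsync = sendAsync

// notify is a hypothetical caller that always goes through SendAsync.
func notify(to string) {
	SendAsync(&Message{To: to, Subject: "hello"})
}

func main() {
	notify("alice@example.com")
}
```

A test would replace `SendAsync`, call the code under test, inspect the captured messages, and restore the original afterwards.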

Due to a bug in the GitLab API, the diff_refs field is populated in the response when fetching an individual merge request, but not when fetching a list of them. That field is used to populate the merge base commit SHA.

While there is detection for the merge base even when it is not populated by the downloader, that detection is not flawless. Specifically, when a GitLab merge request has a single commit and is merged with the squash strategy, the base branch is fast-forwarded instead of a separate squash or merge commit being created. The merge base detection attempts to find the last commit on the base branch that is also on the PR branch, but in the fast-forward case that is the PR's only commit. Assuming the head commit is also the merge base results in the import of a PR with 0 commits and no diff.

This PR uses the individual merge request endpoint to fetch merge request data with the diff_refs field. With that data, the merge base commit can be properly set, which, by not relying on the detection mentioned above, correctly imports PRs that were "merged" by fast-forwarding the base branch.

ref: https://gitlab.com/gitlab-org/gitlab/-/issues/29620
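The resulting preference order can be sketched as: use `diff_refs.base_sha` when the individual endpoint returned it, and only fall back to detection otherwise. The struct shapes and `mergeBase` are hypothetical simplifications of the downloader; `base_sha`, `head_sha`, and `start_sha` are the field names in GitLab's `diff_refs` object.

```go
package main

import "fmt"

// diffRefs mirrors GitLab's diff_refs object (base_sha, head_sha, start_sha).
type diffRefs struct {
	BaseSHA  string
	HeadSHA  string
	StartSHA string
}

// mergeRequest keeps only what this sketch needs; DiffRefs is nil when the
// merge request came from the list endpoint.
type mergeRequest struct {
	DiffRefs *diffRefs
}

// mergeBase prefers diff_refs.base_sha and only falls back to detection.
func mergeBase(mr mergeRequest, detect func() string) string {
	if mr.DiffRefs != nil && mr.DiffRefs.BaseSHA != "" {
		return mr.DiffRefs.BaseSHA
	}
	return detect()
}

func main() {
	head := "c0ffee"
	// In the fast-forward case, detection lands on the PR's only commit.
	detect := func() string { return head }
	fmt.Println(mergeBase(mergeRequest{}, detect))
	fmt.Println(mergeBase(mergeRequest{DiffRefs: &diffRefs{BaseSHA: "ba5e", HeadSHA: head}}, detect))
}
```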

Before this PR, the PR migration code populated Gitea's MergedCommitID field using GitLab's merge_commit_sha field. However, that field is only populated when the PR was merged using a merge strategy. When a squash strategy is used, squash_commit_sha is populated instead.

Given that Gitea does not keep track of merge and squash commits separately, this PR simply populates Gitea's MergedCommitID using whichever field is present in the GitLab API response.
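The "whichever field is present" rule amounts to a simple fallback. The struct and function names below are hypothetical; the two fields correspond to GitLab's `merge_commit_sha` and `squash_commit_sha`.

```go
package main

import "fmt"

// gitlabMR holds the two commit fields from GitLab's merge request response.
type gitlabMR struct {
	MergeCommitSHA  string // merge_commit_sha: set for the merge strategy
	SquashCommitSHA string // squash_commit_sha: set for the squash strategy
}

// mergedCommitID returns whichever commit SHA GitLab populated.
func mergedCommitID(mr gitlabMR) string {
	if mr.MergeCommitSHA != "" {
		return mr.MergeCommitSHA
	}
	return mr.SquashCommitSHA
}

func main() {
	fmt.Println(mergedCommitID(gitlabMR{SquashCommitSHA: "5a5a"}))
}
```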

Hello there,

Cargo Index over HTTP is now preferred over git for package updates: we should not force users who do not need the git repo to have it created/updated on each publish (it can still be created in the package settings).

The current behavior when publishing is to check whether the repo exists, create it on the fly if not, and then update its content.

The Cargo HTTP index does not rely on the repo itself, so this is useless for everyone not using the git protocol for their cargo registry.

This PR only disables the on-the-fly creation of the repo when publishing a crate.

This is linked to #26844 (error 500 when trying to publish a crate if the user is missing write access to the repo) because the repo is now optional.

---------

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

When `webhook.PROXY_URL` has been set, the old code checks whether the proxy host is in `ALLOWED_HOST_LIST`, and otherwise rejects requests through the proxy. This requires users to add the proxy host to `ALLOWED_HOST_LIST`. However, that actually allows all requests to any port on that host, even though the proxy host is probably an internal address.

But things may be even worse: `ALLOWED_HOST_LIST` doesn't really work when requests are sent to the allowed proxy, since the proxy could forward them to any host.

This PR fixes it by:

- If the proxy has been set, always allowing connections to the proxy host and port.

- Checking `ALLOWED_HOST_LIST` before forwarding.

Closes #27455

> The mechanism responsible for long-term authentication (the 'remember me' cookie) uses a weak construction technique. It will hash the user's hashed password and the rands value; it will then call the secure cookie code, which will encrypt the user's name with the computed hash. If one were able to dump the database, they could extract those two values to rebuild that cookie and impersonate a user. That vulnerability exists from the date the dump was obtained until a user changed their password.
>
> To fix this security issue, the cookie could be created and verified using a different technique such as the one explained at https://paragonie.com/blog/2015/04/secure-authentication-php-with-long-term-persistence#secure-remember-me-cookies.

The PR removes the now obsolete setting `COOKIE_USERNAME`.

`assert.Fail()` continues executing the code, while `assert.FailNow()` does not. I think those uses of `assert.Fail()` should exit immediately.

PS: perhaps it's a good idea to use [require](https://pkg.go.dev/github.com/stretchr/testify/require) in some places, because the assert package's default behavior does not exit when an error occurs, which makes it difficult to find the root cause of the error.

- Currently, in the cron tasks, the 'Previous Time' only displays the previous time the cron library executed the function, not any manual executions of the task.

- Store the last run's time in memory in the Task struct, and use it when that time is later than the time the cron library last executed this task.

- This ensures that if an instance admin manually starts a task, there's feedback that the task is running or has run, because the task might run so quickly that the status icon has already changed to a checkmark.

- Tasks that are executed at startup now reflect this as well, as the time of their execution on startup is now shown as 'Previous Time'.

- Added integration tests for the API part, which is easier to test because querying the HTML table of cron tasks is non-trivial.

- Resolves https://codeberg.org/forgejo/forgejo/issues/949

(cherry picked from commit fd34fdac1408ece6b7d9fe6a76501ed9a45d06fa)

---------

Co-authored-by: Gusted <postmaster@gusted.xyz>

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Co-authored-by: silverwind <me@silverwind.io>

This pull request is a minor code cleanup.

From the Go specification (https://go.dev/ref/spec#For_range):

> "1. For a nil slice, the number of iterations is 0."

> "3. If the map is nil, the number of iterations is 0."

`len` returns 0 if the slice or map is nil (https://pkg.go.dev/builtin#len). Therefore, checking `len(v) > 0` before a loop is unnecessary.
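A minimal demonstration of the two spec rules quoted above: ranging over a nil slice or a nil map performs zero iterations, so the loop body is safely skipped without a preceding `len` check. `countIterations` is just an illustrative helper.

```go
package main

import "fmt"

// countIterations ranges over both arguments with no len() guard.
func countIterations(s []string, m map[string]int) int {
	n := 0
	for range s {
		n++
	}
	for range m {
		n++
	}
	return n
}

func main() {
	var s []string       // nil slice
	var m map[string]int // nil map
	fmt.Println(len(s), len(m), countIterations(s, m))
}
```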

---

At the time of writing this pull request, there wasn't a lint rule that catches these issues. The closest I could find is https://staticcheck.dev/docs/checks/#S103

Signed-off-by: Eng Zer Jun <engzerjun@gmail.com>

With this PR, we added the possibility to configure the Actions timeout values for killing tasks/jobs.

In particular, this enhancement is closely related to the `act_runner` configuration reported below:

```yaml
# The timeout for a job to be finished.
# Please note that the Gitea instance also has a timeout (3h by default) for the job.
# So the job could be stopped by the Gitea instance if it's timeout is shorter than this.
timeout: 3h
```

---

By setting the corresponding key in the INI configuration file, it is possible to let jobs run for more than 3 hours.

Signed-off-by: Francesco Antognazza <francesco.antognazza@gmail.com>

- There's no need to use `In`, as only a single parameter is being passed.

Refs: https://codeberg.org/forgejo/forgejo/pulls/1521

(cherry picked from commit 4a4955f43ae7fc50cfe3b48409a0a10c82625a19)

Co-authored-by: Gusted <postmaster@gusted.xyz>

When the user does not set a username lookup condition, LDAP returns an empty string `""` as the username, so in the following code

```
if isExist, err := user_model.IsUserExist(db.DefaultContext, 0, sr.Username)
```

the user is always determined to be nonexistent, and updates to user information are never performed.

Fix #27049