Close #31801. Follow #31761.

Since there are so many benefits of compression and there are no reports

of related issues after weeks, it should be fine to enable compression

by default.

This will allow instance admins to view signup patterns for
public instances. It is modelled after Discourse, Mastodon, and

MediaWiki's approaches.

Note: This has privacy implications, but as the above-stated open-source

projects take this approach, especially MediaWiki, which I have no doubt

looked into this thoroughly, it is likely okay for us, too. However, I

would be appreciative of any feedback on how this could be improved.

---------

Co-authored-by: Giteabot <teabot@gitea.io>

Replace #32001.

To prevent the context cache from being misused for long-term work

(which would result in using invalid cache without awareness), the

context cache is designed to exist for a maximum of 10 seconds. This

leads to many false reports, especially in the case of slow SQL.

This PR increases it to 5 minutes to reduce false reports.

5 minutes is not a very safe value, as a lot of changes may have

occurred within that time frame. However, as far as I know, there has

not been a case of misuse of context cache discovered so far, so I think

5 minutes should be OK.

Please note that if warning logs appear again after this PR, they
deserve attention: at that point it is almost 100% certain that the
context cache is being misused.

https://github.com/go-fed/httpsig seems to be unmaintained.

Switch to github.com/42wim/httpsig, which has removed deprecated crypto
and defaults to SHA-256 signing for SSH RSA keys.

No impact for those that use ed25519 ssh certificates.

This is a breaking change for:

- gitea.com/gitea/tea (go-sdk) - I'll be sending a PR there too

- activitypub using deprecated crypto (is this actually used?)

Follow #31908. The main refactor is that it has removed the returned

context of `Lock`.

In the old code, `Lock` returned a context to let callers know when
they have lost the lock. But in most cases, callers shouldn't cancel
what they are doing even if they have lost the lock. The design would
also confuse developers and lead them to use it incorrectly.

See the discussion history:

https://github.com/go-gitea/gitea/pull/31813#discussion_r1732041513 and

https://github.com/go-gitea/gitea/pull/31813#discussion_r1734078998

It's a breaking change, but since the new module hasn't been used yet, I

think it's OK to not add the `pr/breaking` label.

## Design principles

It's almost copied from #31908, but with some changes.

### Use spinlock even in memory implementation (unchanged)

In actual use cases, users may cancel requests. `sync.Mutex` will block

the goroutine until the lock is acquired even if the request is

canceled. And the spinlock is more suitable for this scenario since it's

possible to give up the lock acquisition.

Although the spinlock consumes more CPU resources, I think it's

acceptable in most cases.

### Do not expose the mutex to callers (unchanged)

If we expose the mutex to callers, it's possible for callers to reuse

the mutex, which causes more complexity.

For example:

```go

lock := GetLocker(key)

lock.Lock()

// ...

// even if the lock is unlocked, we cannot GC the lock,

// since the caller may still use it again.

lock.Unlock()

lock.Lock()

// ...

lock.Unlock()

// callers have to GC the lock manually.

RemoveLocker(key)

```

For the reasoning, see
https://github.com/go-gitea/gitea/pull/31813#discussion_r1721200549

In this PR, we only expose `ReleaseFunc` to callers. So callers just

need to call `ReleaseFunc` to release the lock, and do not need to care

about the lock's lifecycle.

```go

release, err := locker.Lock(ctx, key)
if err != nil {
	return err
}
// ...
release()

// if callers want to lock again, they have to re-acquire the lock.
// (plain assignment: release and err are already declared above)
release, err = locker.Lock(ctx, key)
// ...

```

In this way, it's also much easier for redis implementation to extend

the mutex automatically, so that callers do not need to care about the

lock's lifecycle. See also

https://github.com/go-gitea/gitea/pull/31813#discussion_r1722659743

### Use "release" instead of "unlock" (unchanged)

The word "unlock" means "unlock an acquired lock". So it's not
acceptable to call "unlock" after failing to acquire the lock, or to
call it multiple times. That forces callers to decide whether calling
"unlock" is allowed.

So we use "release" instead of "unlock" to make the contract clear.
Whether the lock is acquired or not, callers can always call "release",
and it's also safe to call "release" multiple times.

However, callers are still expected to call "release" after acquiring
the lock. If they forget, it will cause a resource leak. That's why
"release" is always safe to call without extra checks: it makes it
harder for callers to forget it.
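One way such a "release" could be made safe to call repeatedly is to wrap the real unlock in `sync.Once`. This is only a sketch: the `ReleaseFunc` definition and the helpers `newRelease` and `noopRelease` are assumptions for illustration, not the module's actual code.

```go
package main

import (
	"fmt"
	"sync"
)

// ReleaseFunc mirrors the name used in the text; this definition is an assumption.
type ReleaseFunc func()

// newRelease wraps unlock so that it runs at most once, however often
// the returned function is called.
func newRelease(unlock func()) ReleaseFunc {
	var once sync.Once
	return func() { once.Do(unlock) }
}

// noopRelease is what a failed acquisition could hand back, so callers
// never need to check whether calling release is allowed.
func noopRelease() ReleaseFunc { return func() {} }

func main() {
	unlocked := 0
	release := newRelease(func() { unlocked++ })
	defer release() // still safe after the explicit calls below

	release()
	release()
	fmt.Println(unlocked) // 1

	noopRelease()() // safe even though nothing was acquired
}
```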

### Acquired locks could be lost, but the callers shouldn't stop

Unlike `sync.Mutex`, which stays locked once acquired until `Unlock` is
called, an acquired distributed lock can be lost.

For example, the caller has acquired the lock and holds it for a long
time, since auto-extending is working for redis. However, it then loses
the connection to the redis server, and it becomes impossible to extend
the lock anymore.

In #31908, the context is canceled to make the operation stop, but
that is not safe. Many operations are not reversible, and interrupting
them could leave the instance corrupted. So `Lock` no longer returns
`ctx` in this PR.

### Multiple ways to use the lock

1. Regular way

```go

release, err := Lock(ctx, key)
if err != nil {
	return err
}
defer release()
// ...

```

2. Early release

```go

release, err := Lock(ctx, key)
if err != nil {
	return err
}
defer release()
// ...

// release the lock earlier
release()

// continue to do something else
// ...

```

3. Functional way

```go

if err := LockAndDo(ctx, key, func(ctx context.Context) error {
	// ...
	return nil
}); err != nil {
	return err
}

```

To help #31813, but do not replace it, since this PR just introduces the

new module but misses some work:

- New option in settings. `#31813` has done it.

- Use the locks in business logic. `#31813` has done it.

So I think the most efficient way is to merge this PR first (if it's

acceptable) and then finish #31813.

## Design principles

### Use spinlock even in memory implementation

In actual use cases, users may cancel requests. `sync.Mutex` will block

the goroutine until the lock is acquired even if the request is

canceled. And the spinlock is more suitable for this scenario since it's

possible to give up the lock acquisition.

Although the spinlock consumes more CPU resources, I think it's

acceptable in most cases.

### Do not expose the mutex to callers

If we expose the mutex to callers, it's possible for callers to reuse

the mutex, which causes more complexity.

For example:

```go

lock := GetLocker(key)

lock.Lock()

// ...

// even if the lock is unlocked, we cannot GC the lock,

// since the caller may still use it again.

lock.Unlock()

lock.Lock()

// ...

lock.Unlock()

// callers have to GC the lock manually.

RemoveLocker(key)

```

For the reasoning, see
https://github.com/go-gitea/gitea/pull/31813#discussion_r1721200549

In this PR, we only expose `ReleaseFunc` to callers. So callers just

need to call `ReleaseFunc` to release the lock, and do not need to care

about the lock's lifecycle.

```go

_, release, err := locker.Lock(ctx, key)
if err != nil {
	return err
}
// ...
release()

// if callers want to lock again, they have to re-acquire the lock.
// (plain assignment: release and err are already declared above)
_, release, err = locker.Lock(ctx, key)
// ...

```

In this way, it's also much easier for redis implementation to extend

the mutex automatically, so that callers do not need to care about the

lock's lifecycle. See also

https://github.com/go-gitea/gitea/pull/31813#discussion_r1722659743

### Use "release" instead of "unlock"

The word "unlock" means "unlock an acquired lock". So it's not
acceptable to call "unlock" after failing to acquire the lock, or to
call it multiple times. That forces callers to decide whether calling
"unlock" is allowed.

So we use "release" instead of "unlock" to make the contract clear.
Whether the lock is acquired or not, callers can always call "release",
and it's also safe to call "release" multiple times.

However, callers are still expected to call "release" after acquiring
the lock. If they forget, it will cause a resource leak. That's why
"release" is always safe to call without extra checks: it makes it
harder for callers to forget it.

### Acquired locks could be lost

Unlike `sync.Mutex`, which stays locked once acquired until `Unlock` is
called, a lock acquired in the new module can be lost.

For example, the caller has acquired the lock and holds it for a long
time, since auto-extending is working for redis. However, it then loses
the connection to the redis server, and it becomes impossible to extend
the lock anymore.

If the caller doesn't stop what it's doing, another instance that can
connect to the redis server could acquire the lock and do the same
thing, which could cause data inconsistency.

So the caller should know what happened. The solution is to return a
new context which will be canceled if the lock is lost or released:

```go

ctx, release, err := locker.Lock(ctx, key)
if err != nil {
	return err
}
defer release()
// ...
DoSomething(ctx)
// the lock is lost now, then ctx has been canceled.

// Failed, since ctx has been canceled.
DoSomethingElse(ctx)

```

### Multiple ways to use the lock

1. Regular way

```go

ctx, release, err := Lock(ctx, key)
if err != nil {
	return err
}
defer release()
// ...

```

2. Early release

```go

ctx, release, err := Lock(ctx, key)
if err != nil {
	return err
}
defer release()
// ...

// release the lock earlier and reset the context back
ctx = release()

// continue to do something else
// ...

```

3. Functional way

```go

if err := LockAndDo(ctx, key, func(ctx context.Context) error {
	// ...
	return nil
}); err != nil {
	return err
}

```

Opening a repository calls `ensureValidRepository` and also
`CatFileBatch`. But sometimes their results are not used before the
repository is closed, so invoking the git command 3 times for every
opened repository is a waste of CPU.

This PR removes all of these from `OpenRepository` and only keeps the
check for whether the folder exists. When a batch is necessary, the
necessary functions will be invoked.

Fix #23668

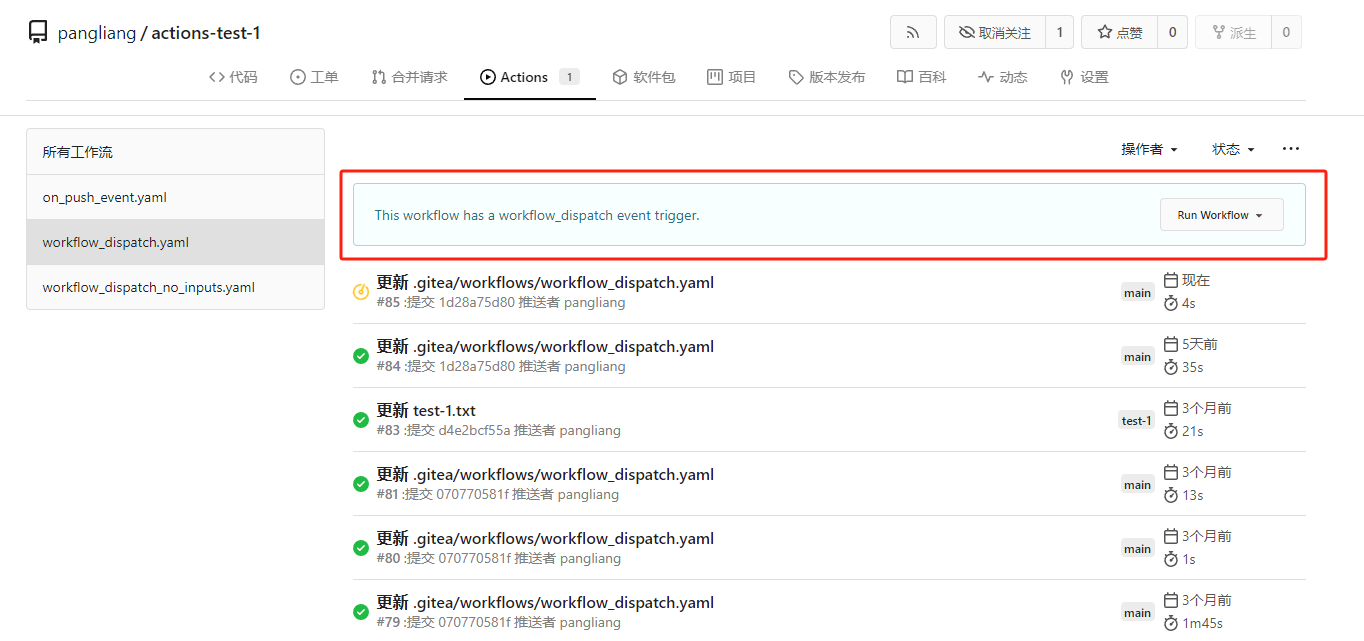

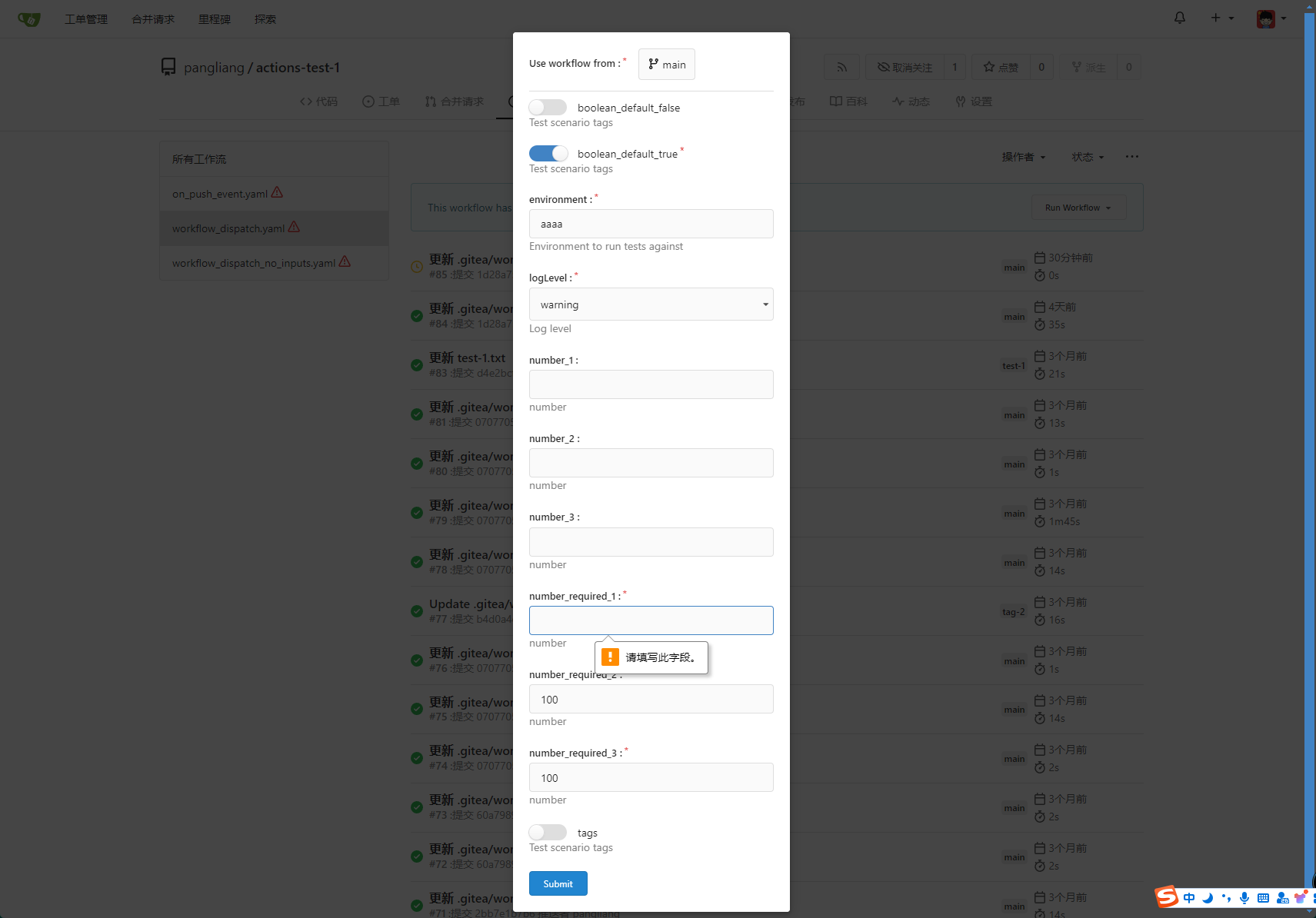

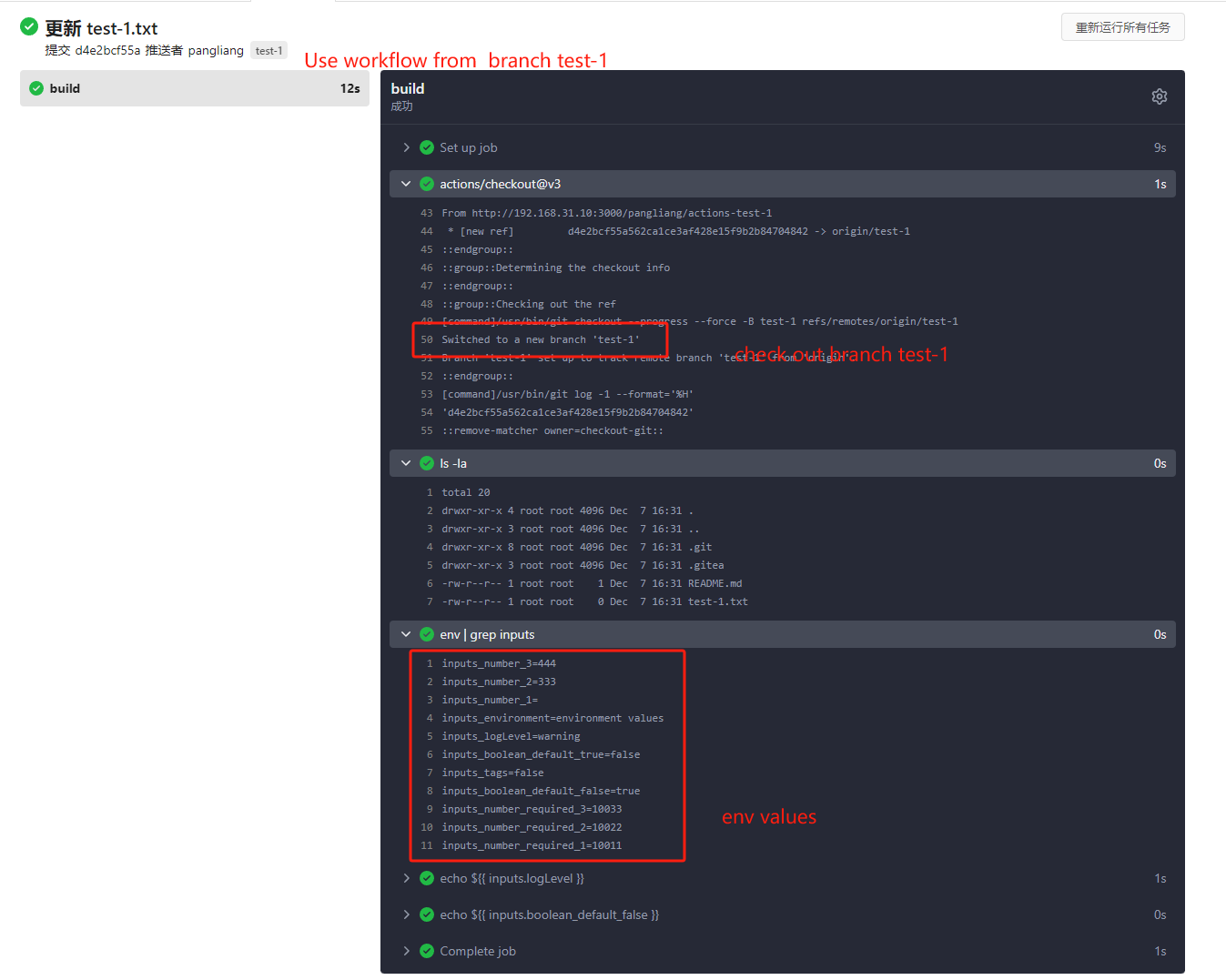

My plan:

* In the `actions.list` method, if workflow is selected and IsAdmin,

check whether the on event contains `workflow_dispatch`. If so, display

a `Run workflow` button to allow the user to manually trigger the run.

* Providing a form that allows users to select target branch or tag, and

these parameters can be configured in yaml

* Simple form validation, `required` input cannot be empty

* Add a route `/actions/run`, and an `actions.Run` method to handle

* Add `WorkflowDispatchPayload` struct to pass the Webhook event payload

to the runner when triggered, this payload carries the `inputs` values

and other fields, doc: [workflow_dispatch

payload](https://docs.github.com/en/webhooks/webhook-events-and-payloads#workflow_dispatch)

Other PRs

* The `Workflow.WorkflowDispatchConfig()` method still returns non-nil
when workflow_dispatch is not defined. I submitted a PR,
https://gitea.com/gitea/act/pulls/85, to fix it. Still waiting for them
to process it.

The behavior should be the same as GitHub's, but may cause confusion.
Here's a quick reminder.

* The [doc](https://docs.github.com/en/actions/using-workflows/events-that-trigger-workflows#workflow_dispatch)
says: this event will `only` trigger a workflow run if the workflow file
is `on the default branch`.

* If the workflow yaml file only exists in a non-default branch, it

cannot be triggered. (It will not even show up in the workflow list)

* If the same workflow yaml file exists in each branch at the same time,

the version of the default branch is used. Even if `Use workflow from`

selects another branch

```yaml

name: Docker Image CI

on:
  workflow_dispatch:
    inputs:
      logLevel:
        description: 'Log level'
        required: true
        default: 'warning'
        type: choice
        options:
          - info
          - warning
          - debug
      tags:
        description: 'Test scenario tags'
        required: false
        type: boolean
      boolean_default_true:
        description: 'Test scenario tags'
        required: true
        type: boolean
        default: true
      boolean_default_false:
        description: 'Test scenario tags'
        required: false
        type: boolean
        default: false
      environment:
        description: 'Environment to run tests against'
        type: environment
        required: true
        default: 'environment values'
      number_required_1:
        description: 'number '
        type: number
        required: true
        default: '100'
      number_required_2:
        description: 'number'
        type: number
        required: true
        default: '100'
      number_required_3:
        description: 'number'
        type: number
        required: true
        default: '100'
      number_1:
        description: 'number'
        type: number
        required: false
      number_2:
        description: 'number'
        type: number
        required: false
      number_3:
        description: 'number'
        type: number
        required: false

env:
  inputs_logLevel: ${{ inputs.logLevel }}
  inputs_tags: ${{ inputs.tags }}
  inputs_boolean_default_true: ${{ inputs.boolean_default_true }}
  inputs_boolean_default_false: ${{ inputs.boolean_default_false }}
  inputs_environment: ${{ inputs.environment }}
  inputs_number_1: ${{ inputs.number_1 }}
  inputs_number_2: ${{ inputs.number_2 }}
  inputs_number_3: ${{ inputs.number_3 }}
  inputs_number_required_1: ${{ inputs.number_required_1 }}
  inputs_number_required_2: ${{ inputs.number_required_2 }}
  inputs_number_required_3: ${{ inputs.number_required_3 }}

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - run: ls -la
      - run: env | grep inputs
      - run: echo ${{ inputs.logLevel }}
      - run: echo ${{ inputs.boolean_default_false }}

```

---------

Co-authored-by: TKaxv_7S <954067342@qq.com>

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Denys Konovalov <kontakt@denyskon.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Fix #31395

This regression is introduced by #30273. To find out how GitHub handles

this case, I did [some

tests](https://github.com/go-gitea/gitea/issues/31395#issuecomment-2278929115).

I use redirect in this PR instead of checking if the corresponding `.md`

file exists when rendering the link because GitHub also uses redirect.

With this PR, there is no need to resolve the raw wiki link when

rendering a wiki page. If a wiki link points to a raw file, access will

be redirected to the raw link.

Fix #31271.

When gogit is enabled, `IsObjectExist` calls
`repo.gogitRepo.ResolveRevision`, which is not correct. That method is
for checking references, not objects. It happens to work with a commit
hash, since a commit hash is both a valid reference and a commit
object, but it doesn't work with blob objects.

This causes #31271, because all blob objects are reported as not
existing.

Support compression for Actions logs to save storage space and

bandwidth. Inspired by

https://github.com/go-gitea/gitea/issues/24256#issuecomment-1521153015

The biggest challenge is that the compression format should support

[seekable](https://github.com/facebook/zstd/blob/dev/contrib/seekable_format/zstd_seekable_compression_format.md).

So when users are viewing a part of the log lines, Gitea doesn't need to

download the whole compressed file and decompress it.

That means gzip cannot help here. After some research, there aren't
many choices (for example bgzip and xz), and I think zstd is the most
popular one. It has Go implementations in
[zstd](https://github.com/klauspost/compress/tree/master/zstd) and
[zstd-seekable-format-go](https://github.com/SaveTheRbtz/zstd-seekable-format-go),
and even better, it has good compatibility: a seekable-format zstd file
can be read by a regular zstd reader.

This PR introduces a new package `zstd` to combine and wrap the two

packages, to provide a unified and easy-to-use API.

A new setting `LOG_COMPRESSION` is added to the config. Although I
don't see any reason not to use compression, I think it's a good idea
to keep the default as `none` to be consistent with old versions.

`LOG_COMPRESSION` takes effect for only new log files, it adds `.zst` as

an extension to the file name, so Gitea can determine if it needs

decompression according to the file name when reading. Old files will

keep the format since it's not worth converting them, as they will be

cleared after #31735.

<img width="541" alt="image"

src="https://github.com/user-attachments/assets/e9598764-a4e0-4b68-8c2b-f769265183c9">

Found at

https://github.com/go-gitea/gitea/pull/31790#issuecomment-2272898915

`unit-tests-gogit` has never worked, since the workflow sets `TAGS` to
`gogit`, but the Makefile uses `TEST_TAGS`.

This PR adds the values of `TAGS` to `TEST_TAGS`, ensuring that setting

`TAGS` is always acceptable and avoiding confusion about which one

should be set.

Fix #31738

When pushing a new branch, the old commit is zero. Most git commands

cannot recognize the zero commit id. To get the changed files in the

push, we need to get the first diverge commit of this branch. In most

situations, we could check commits one by one until one commit is

contained by another branch. Then we will think that commit is the

diverge point.

In a pre-receive hook, this is more difficult because none of the
commits have been merged yet; git actually stores them in a temporary
place. So we need to pass some environment variables to let git know
the commits exist.

Close #27031

If an rpm package does not contain a matching gpg signature, the
installation will fail (see #27031). This PR adds automatic signing of
rpm uploads.

This option is turned off by default for compatibility.

If a pull request review was assigned to a team, the members of the
team were not shown in the "requested_reviewers" field, so the field
was null. As a solution, I added the team members to the array.

Fix #31764

Fix #31137.

Replace #31623, #31697.

When migrating LFS objects, if any object fails (for example, some
objects are lost, which is not really critical), Gitea stops migrating
LFS immediately but treats the migration as successful.

This PR checks the error according to the [LFS api

doc](https://github.com/git-lfs/git-lfs/blob/main/docs/api/batch.md#successful-responses).

> LFS object error codes should match HTTP status codes where possible:

>

> - 404 - The object does not exist on the server.

> - 409 - The specified hash algorithm disagrees with the server's

acceptable options.

> - 410 - The object was removed by the owner.

> - 422 - Validation error.

If the error is `404`, it's safe to ignore it and continue migration.

Otherwise, stop the migration and mark it as failed to ensure data

integrity of LFS objects.

Maybe we should also ignore other errors (maybe `410`? I'm not sure
what the difference is between "does not exist" and "removed by the
owner"). We can add them later, when users report that they have
failed to migrate LFS because of an error which should be ignored.
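The rule described above can be sketched as follows. The types `objectError` and `objectResult` and the function `filterMigratable` are hypothetical stand-ins for the per-object entries of a batch response, not Gitea's actual code.

```go
package main

import (
	"fmt"
	"net/http"
)

// objectError and objectResult are hypothetical stand-ins for the
// per-object entries of an LFS batch response.
type objectError struct {
	Code    int
	Message string
}

type objectResult struct {
	Oid string
	Err *objectError
}

// filterMigratable returns the OIDs that can be migrated. Objects reported
// as 404 are skipped; any other object error aborts the whole migration.
func filterMigratable(results []objectResult) ([]string, error) {
	var oids []string
	for _, r := range results {
		switch {
		case r.Err == nil:
			oids = append(oids, r.Oid)
		case r.Err.Code == http.StatusNotFound:
			continue // the object does not exist upstream: safe to ignore
		default:
			return nil, fmt.Errorf("lfs object %s: %d %s", r.Oid, r.Err.Code, r.Err.Message)
		}
	}
	return oids, nil
}

func main() {
	oids, err := filterMigratable([]objectResult{
		{Oid: "aaa"},
		{Oid: "bbb", Err: &objectError{Code: 404, Message: "not found"}},
	})
	fmt.Println(oids, err) // [aaa] <nil>

	_, err = filterMigratable([]objectResult{
		{Oid: "ccc", Err: &objectError{Code: 422, Message: "validation error"}},
	})
	fmt.Println(err) // lfs object ccc: 422 validation error
}
```

Adding another ignorable code later (such as `410`) would only mean extending the second `case`.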

See discussion on #31561 for some background.

The introspect endpoint was using the OIDC token itself for

authentication. This fixes it to use basic authentication with the

client ID and secret instead:

* Applications with a valid client ID and secret should be able to

successfully introspect an invalid token, receiving a 200 response

with JSON data that indicates the token is invalid

* Requests with an invalid client ID and secret should not be able

to introspect, even if the token itself is valid

Unlike #31561 (which just future-proofed the current behavior against

future changes to `DISABLE_QUERY_AUTH_TOKEN`), this is a potential

compatibility break (some introspection requests without valid client

IDs that would previously succeed will now fail). Affected deployments

must begin sending a valid HTTP basic authentication header with their

introspection requests, with the username set to a valid client ID and

the password set to the corresponding client secret.
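For affected clients, a request could be built roughly like this. It is a sketch under assumptions: the endpoint URL, client ID, and secret are placeholders, and the `token` form field follows the usual token introspection convention rather than anything stated in this PR.

```go
package main

import (
	"fmt"
	"net/http"
	"net/url"
	"strings"
)

// newIntrospectRequest builds an introspection request that authenticates
// with HTTP basic auth (client ID as username, client secret as password).
func newIntrospectRequest(endpoint, clientID, clientSecret, token string) (*http.Request, error) {
	form := url.Values{"token": {token}}
	req, err := http.NewRequest(http.MethodPost, endpoint, strings.NewReader(form.Encode()))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/x-www-form-urlencoded")
	req.SetBasicAuth(clientID, clientSecret)
	return req, nil
}

func main() {
	// All values below are placeholders for illustration.
	req, err := newIntrospectRequest(
		"https://gitea.example.com/login/oauth/introspect",
		"my-client-id", "my-client-secret", "token-to-check",
	)
	if err != nil {
		panic(err)
	}
	user, _, ok := req.BasicAuth()
	fmt.Println(user, ok) // my-client-id true
}
```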

When you are entering a number in the issue search, you likely want the

issue with the given ID (code internal concept: issue index).

As such, when a number is detected, the issue with the corresponding ID

will now be added to the results.
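The detection step might look like the sketch below. `parseIssueIndex` is a hypothetical helper, and treating only positive integers as candidate indexes is an assumption.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseIssueIndex reports whether keyword is a positive number that could
// be an issue index. Non-numeric keywords are left to the normal search.
func parseIssueIndex(keyword string) (int64, bool) {
	n, err := strconv.ParseInt(strings.TrimSpace(keyword), 10, 64)
	if err != nil || n <= 0 {
		return 0, false
	}
	return n, true
}

func main() {
	for _, kw := range []string{"4479", "fix login", "12abc"} {
		idx, ok := parseIssueIndex(kw)
		fmt.Println(kw, idx, ok)
	}
}
```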

Fixes #4479

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Make it possible to let mails show e.g.:

`Max Musternam (via gitea.kithara.com) <gitea@kithara.com>`

Docs: https://gitea.com/gitea/docs/pulls/23

---

*Sponsored by Kithara Software GmbH*

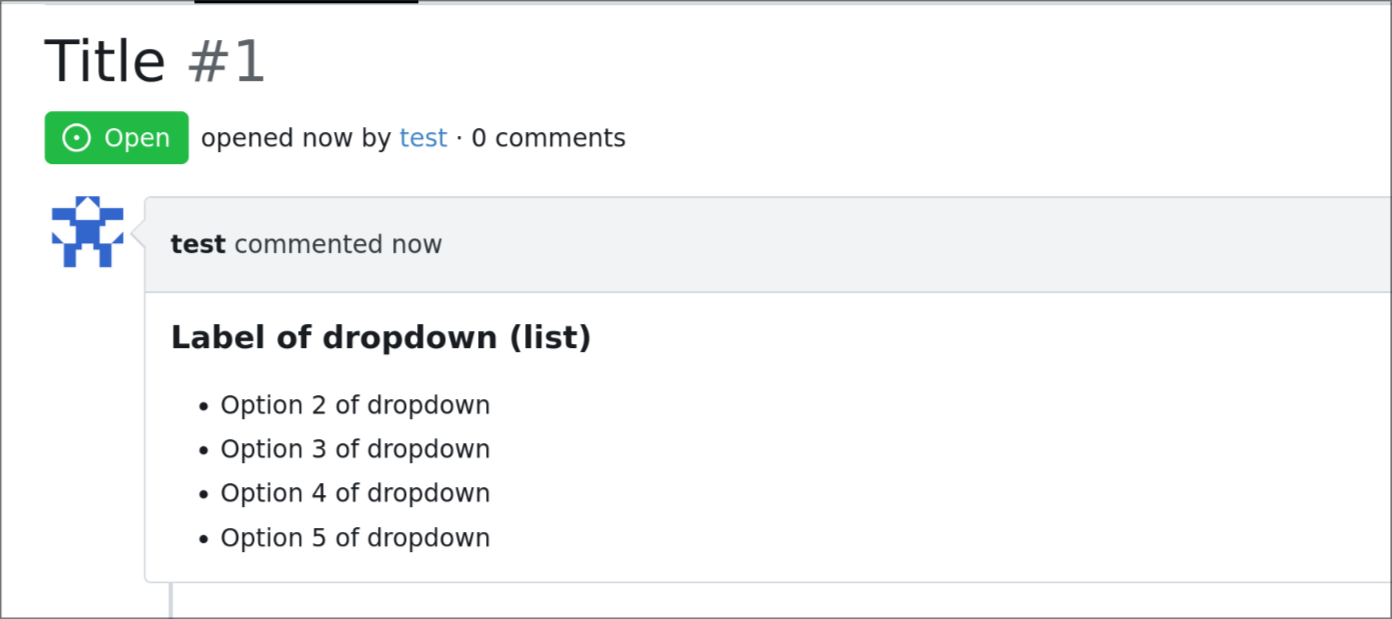

An issue template dropdown can have many entries, and it could be
better to have them rendered as a list later on if multi-select is
enabled.

So this adds an option to the issue template engine to do so.

DOCS: https://gitea.com/gitea/docs/pulls/19

---

## demo:

```yaml

name: Name
title: Title
about: About
labels: ["label1", "label2"]
ref: Ref
body:
  - type: dropdown
    id: id6
    attributes:
      label: Label of dropdown (list)
      description: Description of dropdown
      multiple: true
      list: true
      options:
        - Option 1 of dropdown
        - Option 2 of dropdown
        - Option 3 of dropdown
        - Option 4 of dropdown
        - Option 5 of dropdown
        - Option 6 of dropdown
        - Option 7 of dropdown
        - Option 8 of dropdown
        - Option 9 of dropdown

```

---

*Sponsored by Kithara Software GmbH*

We have some instances that only allow using an external authentication

source for authentication. In this case, users changing their email,

password, or linked OpenID connections will not have any effect, and

we'd like to prevent showing that to them to prevent confusion.

Included in this are several changes to support this:

* A new setting to disable user managed authentication credentials

(email, password & OpenID connections)

* A new setting to disable user managed MFA (2FA codes & WebAuthn)

* Fix an issue where some templates had separate logic for determining

if a feature was disabled since it didn't check the globally disabled

features

* Hide more user setting pages in the navbar when their settings aren't

enabled

---------

Co-authored-by: Kyle D <kdumontnu@gmail.com>

Fixes #22722

### Problem

Currently, it is not possible to force push to a branch with branch

protection rules in place. There are often times where this is necessary

(CI workflows/administrative tasks etc).

The current workaround is to rename/remove the branch protection,

perform the force push, and then reinstate the protections.

### Solution

Provide an additional section in the branch protection rules to allow

users to specify which users with push access can also force push to the

branch. The default value of the rule will be set to `Disabled`, and the

UI is intuitive and very similar to the `Push` section.

It is worth noting in this implementation that allowing force push does

not override regular push access, and both will need to be enabled for a

user to force push.

This applies to manual force push to a remote, and also in Gitea UI

updating a PR by rebase (which requires force push)

This modifies the `BranchProtection` API structs to add:

- `enable_force_push bool`

- `enable_force_push_whitelist bool`

- `force_push_whitelist_usernames string[]`

- `force_push_whitelist_teams string[]`

- `force_push_whitelist_deploy_keys bool`
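The interaction between regular push access and force push can be sketched as below. The `rule` struct is a hypothetical, simplified view whose field names loosely follow the API fields listed above; the evaluation order is an assumption, not the actual implementation.

```go
package main

import "fmt"

// rule is a hypothetical, simplified view of a branch protection rule.
type rule struct {
	PushUsers                map[string]bool
	EnableForcePush          bool
	EnableForcePushWhitelist bool
	ForcePushWhitelistUsers  map[string]bool
}

func (r rule) canPush(user string) bool { return r.PushUsers[user] }

// canForcePush requires regular push access in addition to force push
// being enabled (and, if the whitelist is enabled, membership in it).
func (r rule) canForcePush(user string) bool {
	if !r.EnableForcePush || !r.canPush(user) {
		return false
	}
	if r.EnableForcePushWhitelist {
		return r.ForcePushWhitelistUsers[user]
	}
	return true
}

func main() {
	r := rule{
		PushUsers:                map[string]bool{"alice": true},
		EnableForcePush:          true,
		EnableForcePushWhitelist: true,
		ForcePushWhitelistUsers:  map[string]bool{"alice": true, "bob": true},
	}
	fmt.Println(r.canForcePush("alice")) // true
	fmt.Println(r.canForcePush("bob"))   // false: no regular push access
}
```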

### Updated Branch Protection UI:

<img width="943" alt="image"

src="https://github.com/go-gitea/gitea/assets/79623665/7491899c-d816-45d5-be84-8512abd156bf">

### Pull Request `Update branch by Rebase` option enabled with source

branch `test` being a protected branch:

<img width="1038" alt="image"

src="https://github.com/go-gitea/gitea/assets/79623665/57ead13e-9006-459f-b83c-7079e6f4c654">

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Running git update-index for every individual file is slow, so add and

remove everything with a single git command.

When such a big commit lands in the default branch, it could cause PR

creation and patch checking for all open PRs to be slow, or time out

entirely. For example, a commit that removes 1383 files was measured to

take more than 60 seconds and timed out. With this change checking took

about a second.

This is related to #27967, though this will not help with commits that

change many lines in few files.

Closes #22015

After adding a passkey, you can now simply log in with it directly by
clicking `Sign in with a passkey`.

Note for testing: you need to run Gitea using `https` to get the full
passkeys experience.

---------

Co-authored-by: silverwind <me@silverwind.io>

Support legacy _links LFS batch response.

Fixes #31512.

This is a backwards-compatible change to the LFS client so that, when
mirroring from an upstream that has a batch API, it can download
objects whether the responses contain the `_links` field or its
successor, the `actions` field. When Gitea must fall back to the legacy
`_links` field, a log line is emitted at INFO level, which looks like
this:

```

...s/lfs/http_client.go:188:performOperation() [I] <LFSPointer ee95d0a27ccdfc7c12516d4f80dcf144a5eaf10d0461d282a7206390635cdbee:160> is using a deprecated batch schema response!

```

I've only run `test-backend` with this code, but added a new test to

cover this case. Additionally I have a fork with this change deployed

which I've confirmed syncs LFS from Gitea<-Artifactory (which has legacy

`_links`) as well as from Gitea<-Gitea (which has the modern `actions`).
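The fallback can be illustrated with a small decoding sketch. The `objectResponse` and `link` types and the `downloadHref` method are simplified, hypothetical shapes for this example and are not the client's real structs.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// link and objectResponse are simplified, hypothetical shapes for one
// object in a batch response.
type link struct {
	Href string `json:"href"`
}

type objectResponse struct {
	Oid     string          `json:"oid"`
	Actions map[string]link `json:"actions"`
	Links   map[string]link `json:"_links"` // legacy field
}

// downloadHref prefers `actions` and falls back to the legacy `_links`.
// legacy reports whether the fallback was needed.
func (o objectResponse) downloadHref() (href string, legacy, ok bool) {
	if l, found := o.Actions["download"]; found {
		return l.Href, false, true
	}
	if l, found := o.Links["download"]; found {
		return l.Href, true, true
	}
	return "", false, false
}

func main() {
	for _, raw := range []string{
		`{"oid":"a1","actions":{"download":{"href":"https://example.com/new"}}}`,
		`{"oid":"b2","_links":{"download":{"href":"https://example.com/old"}}}`,
	} {
		var obj objectResponse
		if err := json.Unmarshal([]byte(raw), &obj); err != nil {
			panic(err)
		}
		href, legacy, ok := obj.downloadHref()
		fmt.Println(obj.Oid, href, legacy, ok)
	}
}
```

The `legacy` flag is where a caller could emit the INFO log line shown above.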

Signed-off-by: Royce Remer <royceremer@gmail.com>

This change fixes cases where a Wiki page refers to a video stored in
the Wiki repository using a relative path. It follows the similar case
that has already been implemented for images.

Test plan:

- Create repository and Wiki page

- Clone the Wiki repository

- Add video to it, say `video.mp4`

- Modify the markdown file to refer to the video using `<video

src="video.mp4">`

- Commit the Wiki page

- Observe that the video is properly displayed

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

This PR modifies the structs for editing and creating org teams to allow

team names to be up to 255 characters. The previous maximum length was

30 characters.

This PR only does "renaming":

* `Route` should be `Router` (and chi router is also called "router")

* `Params` should be `PathParam` (to distinguish it from URL query param, and to match `FormString`)

* Use lower case for private functions to avoid exposing or abusing

Parse base path and tree path so that media links can be correctly

created with /media/.

Resolves #31294

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Fix #31361, and add tests

And this PR introduces an undocumented & debug-purpose-only config

option: `USE_SUB_URL_PATH`. It does nothing for end users, it only helps

the development of sub-path related problems.

And also fix #31366

Co-authored-by: @ExplodingDragon

Fix #31327

This is a quick patch to fix the bug.

Some parameters are using 0, some are using -1. I think it needs a

refactor to keep consistent. But that will be another PR.

The PR replaces all `goldmark/util.BytesToReadOnlyString` with

`util.UnsafeBytesToString`, `goldmark/util.StringToReadOnlyBytes` with

`util.UnsafeStringToBytes`. This removes one `TODO`.

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Enable [unparam](https://github.com/mvdan/unparam) linter.

Often I could not tell the intention why param is unused, so I put

`//nolint` for those cases like webhook request creation functions never

using `ctx`.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: delvh <dev.lh@web.de>

This solution implements a new config variable MAX_ROWS, which

corresponds to the “Maximum allowed rows to render CSV files. (0 for no

limit)” and rewrites the Render function for CSV files in markup module.

Now the render function only reads the file once, having MAX_FILE_SIZE+1

as a reader limit and MAX_ROWS as a row limit. When the file is larger

than MAX_FILE_SIZE or has more rows than MAX_ROWS, it only renders until

the limit, and displays a user-friendly warning informing that the

rendered data is not complete, in the user's language.

---

Previously, when a CSV file was larger than the limit, the render

function lost its function to render the code. There were also multiple

reads to the file, in order to determine its size and render or

pre-render.

The warning:

This PR implements object storage (LFS, packages, attachments, etc.)
for Azure Blob Storage. It depends on the official Azure Go SDK and
supports both the Azure Blob Storage cloud service and the Azurite mock
server.

Replace #25458

Fix #22527

- [x] CI Tests

- [x] integration test; MSSQL integration tests are now based on azureblob

- [x] unit test

- [x] CLI Migrate Storage

- [x] Documentation for configuration added

------

TODO (other PRs):

- [ ] Improve performance of `blob download`.

---------

Co-authored-by: yp05327 <576951401@qq.com>

We wanted to be able to use the IAM role provided by the EC2 instance

metadata in order to access S3 via the Minio configuration. To do this,

a new credentials chain is added that will check the following locations

for credentials when an access key is not provided. In priority order,

they are:

1. MINIO_ prefixed environment variables

2. AWS_ prefixed environment variables

3. a minio credentials file

4. an aws credentials file

5. EC2 instance metadata

This PR splits `Board` into two parts: the struct is renamed to
`Column`, and a separate `Template Type` is introduced.

To make it easier to review, this PR does not change the database
schemas; the changes are just renames. The database schema changes
could be made in future PRs.

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: yp05327 <576951401@qq.com>

This PR adds some fields to the Gitea webhook payload that
[openproject](https://www.openproject.org/) expects to exist in order
to process the webhooks.

These fields exist in GitHub's webhook payload, so adding them makes
Gitea's native webhook more compatible with GitHub's.

Remove "EncodeSha1", it shouldn't be used as a general purpose hasher

(just like we have removed "EncodeMD5" in #28622)

Rewrite the "time-limited code" related code and write better tests, the

old code doesn't seem quite right.

By the way:

* Re-format the "color.go" to Golang code style

* Remove unused `overflow-y: scroll;` from `.project-column` because

there is `overflow: visible`

Fix #31002

1. Mention: make sure the `Host` and `X-Forwarded-Proto` headers are correctly passed to Gitea

2. Clarify the basic requirements and move the "general configuration" to the top

3. Add a comment for the "container registry"

4. Use 1.21 behavior if the reverse proxy is not correctly configured

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Add a configuration item to enable S3 virtual-hosted style (V2) to solve

the problem caused by some S3 service providers not supporting path

style (V1).

Merging PR may fail because of various problems. The pull request may

have a dirty state because there is no transaction when merging a pull

request. ref

https://github.com/go-gitea/gitea/pull/25741#issuecomment-2074126393

This PR moves all database update operations for merging a pull request
to the post-receive handler and wraps them in a database transaction.
That means if the database operations fail, the git merge fails and the
git client gets a failure result.

There are already many tests for pull request merging, so we don't need

to add a new one.

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

The test had a dependency on `https://api.pwnedpasswords.com` which

caused many failures on CI recently:

```

--- FAIL: TestPassword (2.37s)

pwn_test.go:41: Get "https://api.pwnedpasswords.com/range/e6b6a": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

FAIL

coverage: 82.9% of statements

```

Suggested by logs in #30729

- Remove `math/rand.Seed`

`rand.Seed is deprecated: As of Go 1.20 there is no reason to call Seed

with a random value.`

- Replace `math/rand.Read`

`rand.Read is deprecated: For almost all use cases, [crypto/rand.Read]

is more appropriate.`

- Replace `math/rand` with `math/rand/v2`, which is available since Go

1.22
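
A minimal sketch of what the three replacements look like in practice, assuming Go 1.22 or newer. The helper names `randomBytes` and `pickIndex` are hypothetical and only illustrate the standard-library calls.

```go
package main

import (
	crand "crypto/rand"
	"fmt"
	mrand "math/rand/v2"
)

// randomBytes fills a buffer from crypto/rand, the suggested
// replacement for the deprecated math/rand.Read.
func randomBytes(n int) ([]byte, error) {
	buf := make([]byte, n)
	if _, err := crand.Read(buf); err != nil {
		return nil, err
	}
	return buf, nil
}

// pickIndex returns a pseudo-random index in [0, n) using math/rand/v2.
// No call to Seed is needed; the top-level functions are seeded randomly.
func pickIndex(n int) int {
	return mrand.IntN(n)
}

func main() {
	b, err := randomBytes(8)
	if err != nil {
		fmt.Println("read failed:", err)
		return
	}
	fmt.Printf("%d random bytes, index %d\n", len(b), pickIndex(10))
}
```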

Follow #30472:

When a user is created by command line `./gitea admin user create`:

Old behavior before #30472: the first user (admin or non-admin) doesn't

need to change password.

Revert to the old behavior before #30472

Misspell 0.5.0 supports passing a csv file to extend the list of

misspellings, so I added some common ones from the codebase. There is at

least one typo in an API response so we need to decide whether to revert

that and then likely remove the dict entry.

Great thanks to @oliverpool for figuring out the problem and proposing a

fix.

Regression of #28138

An incorrect hash causes all of the user's LFS files to be deleted when running

`doctor fix all`

(by the way, remove unused/non-standard comments)

Co-authored-by: Giteabot <teabot@gitea.io>

Follow #30454

And fix #24957

When using "preferred_username", if no such field,

`extractUserNameFromOAuth2` (old `getUserName`) shouldn't return an

error. All other USERNAME options do not return such error.

And fine tune some logic and error messages, make code more stable and

more friendly to end users.
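
The intended behavior for a missing field can be sketched like this. `userNameFromClaims` is a hypothetical stand-in, not the actual `extractUserNameFromOAuth2` function, and the claims map is an assumed representation of the OAuth2 user data.

```go
package main

import "fmt"

// userNameFromClaims returns the string stored under field, or an empty
// string when the field is absent or not a string. A missing field is
// treated as "no username supplied", not as an error.
func userNameFromClaims(claims map[string]any, field string) string {
	if v, ok := claims[field].(string); ok {
		return v
	}
	return ""
}

func main() {
	claims := map[string]any{"email": "user@example.com"}
	// "preferred_username" is absent, so the result is empty and the
	// caller can fall back to another way of choosing a name.
	fmt.Printf("%q\n", userNameFromClaims(claims, "preferred_username"))
}
```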

Initial support for #25680

This PR only adds some simple styles from GitHub, it is big enough and

it focuses on adding the necessary framework-level supports. More styles

could be fine-tuned later.

Should resolve #30642.

Before this commit, we were treating an empty `?sort=` query parameter

as the correct sorting type (which is to sort issues in descending order

by their created UNIX time). But when we perform `sort=latest`, we did

not include this as a type so we would sort by the most recently updated

when reaching the `default` switch statement block.

This commit fixes this by considering the empty string, "latest", and

just any other string that is not mentioned in the switch statement as

sorting by newest.
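
The fixed control flow can be sketched as below. The function name, the other sort keys (`oldest`, `recentupdate`), and the column names are assumptions for illustration and may not match the real code.

```go
package main

import "fmt"

// sortOrder maps the ?sort= query value to a hypothetical ORDER BY clause.
func sortOrder(sortType string) string {
	switch sortType {
	case "oldest":
		return "created_unix ASC"
	case "recentupdate":
		return "updated_unix DESC"
	default:
		// "", "latest", and any value not listed above all mean
		// "newest first", so they share the default branch.
		return "created_unix DESC"
	}
}

func main() {
	for _, s := range []string{"", "latest", "bogus", "oldest"} {
		fmt.Printf("%q -> %s\n", s, sortOrder(s))
	}
}
```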

Fix #30643

The old test code is not stable due to the data-race described in the

TODO added at that time.

Make it stable, and remove a debug-only field from old test code.

Notable additions:

- `redefines-builtin-id` forbid variable names that shadow go builtins

- `empty-lines` remove unnecessary empty lines that `gofumpt` does not

remove for some reason

- `superfluous-else` eliminate more superfluous `else` branches

Rules are also sorted alphabetically and I cleaned up various parts of

`.golangci.yml`.

Related to #30375.

It doesn't make sense to import `modules/web/middleware` and

`modules/setting` in `modules/web/session` since the last one is more

low-level.

And it looks like a workaround to call `DeleteLegacySiteCookie` in

`RegenerateSession`, so maybe we could reverse the importing by

registering hook functions.
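
A rough sketch of the hook-registration idea, collapsed into one file for brevity. All names here (`RegisterRegenerateHook`, `regenerateHooks`, the placeholder session ID logic) are hypothetical; in the real layout the first half would live in the low-level package and the registration call in the higher-level one.

```go
package main

import "fmt"

// --- low-level side (think: the session package) ---

// regenerateHooks holds callbacks registered by higher-level packages,
// so this code never has to import them.
var regenerateHooks []func(oldSessionID string)

// RegisterRegenerateHook lets a higher-level package run code whenever
// a session is regenerated.
func RegisterRegenerateHook(fn func(oldSessionID string)) {
	regenerateHooks = append(regenerateHooks, fn)
}

// RegenerateSession stands in for the real function; it only produces a
// placeholder ID and then runs the registered hooks.
func RegenerateSession(oldSessionID string) string {
	newID := oldSessionID + "-regenerated"
	for _, hook := range regenerateHooks {
		hook(oldSessionID)
	}
	return newID
}

// --- higher-level side (think: the middleware package) ---

func main() {
	RegisterRegenerateHook(func(oldSessionID string) {
		// This is where legacy cookie cleanup could be triggered.
		fmt.Println("cleanup for session", oldSessionID)
	})
	fmt.Println(RegenerateSession("abc"))
}
```

With this shape the dependency points from the higher-level package to the lower-level one only.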

Support downloading nuget nuspec manifest[^1]. This is useful for

renovate because it uses this api to find the corresponding repository

- Store nuspec along with nupkg on upload

- allow downloading nuspec

- add doctor command to add missing nuspec files

[^1]:

https://learn.microsoft.com/en-us/nuget/api/package-base-address-resource#download-package-manifest-nuspec

---------

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Using the API, a user's _source_id_ can be set in the _CreateUserOption_

model, but the field is not returned in the _User_ model.

This PR updates the _User_ model to include the field _source_id_ (The

ID of the Authentication Source).

- Add new `Compare` struct to represent comparison between two commits

- Introduce new API endpoint `/compare/*` to get commit comparison

information

- Create new file `repo_compare.go` with the `Compare` struct definition

- Add new file `compare.go` in `routers/api/v1/repo` to handle

comparison logic

- Add new file `compare.go` in `routers/common` to define `CompareInfo`

struct

- Refactor `ParseCompareInfo` function to use `common.CompareInfo`

struct

- Update Swagger documentation to include the new API endpoint for

commit comparison

- Remove duplicate `CompareInfo` struct from

`routers/web/repo/compare.go`

- Adjust base path in Swagger template to be relative (`/api/v1`)

GitHub API

https://docs.github.com/en/rest/commits/commits?apiVersion=2022-11-28#compare-two-commits

---------

Signed-off-by: Bo-Yi Wu <appleboy.tw@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Cookies may exist on "/subpath" and "/subpath/" for some legacy reasons (eg: changed CookiePath behavior in code). The legacy cookie should be removed correctly.
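
As a sketch of the idea, the helper below builds expiring cookies for both path variants using only `net/http`. `expiredCookies` is a hypothetical name and this is not the actual Gitea implementation.

```go
package main

import (
	"fmt"
	"net/http"
	"strings"
)

// expiredCookies returns deletion cookies for both the "/subpath" and
// "/subpath/" variants of cookiePath. A negative MaxAge tells the
// browser to delete the cookie immediately.
func expiredCookies(name, cookiePath string) []*http.Cookie {
	trimmed := strings.TrimSuffix(cookiePath, "/")
	paths := []string{trimmed + "/"}
	if trimmed != "" {
		// Not the site root, so the variant without a slash exists too.
		paths = append([]string{trimmed}, paths...)
	}
	cookies := make([]*http.Cookie, 0, len(paths))
	for _, p := range paths {
		cookies = append(cookies, &http.Cookie{Name: name, Path: p, MaxAge: -1})
	}
	return cookies
}

func main() {
	for _, c := range expiredCookies("legacy_cookie", "/subpath/") {
		// In a handler this would be http.SetCookie(w, c).
		fmt.Println(c.String())
	}
}
```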

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Kyle D <kdumontnu@gmail.com>

Since https://github.com/go-gitea/gitea/pull/25686, a few `interface{}`

have sneaked into the codebase. Add this replacement to `make fmt` to

prevent this from happening again.

Ideally a linter would do this, but I haven't found any suitable.

1. Check whether the label is for an issue or a pull request.

2. Don't use spaces for layout

3. Make sure the test strings have trailing spaces explicitly, to avoid

some IDE removing the trailing spaces automatically.

In Wiki pages, short-links created to local Wiki files were always

expanded as regular Wiki Links. In particular, if a link wanted to point

to a file that Gitea doesn't know how to render (e.g, a .zip file), a

user following the link would be silently redirected to the Wiki's home

page.

This change makes short-links* in Wiki pages be expanded to raw wiki

links, so these local wiki files may be accessed without manually

accessing their URL.

* only short-links ending in a file extension that isn't renderable are

affected.

Closes #27121.

Signed-off-by: Rafael Girão <rafael.s.girao@tecnico.ulisboa.pt>

Co-authored-by: silverwind <me@silverwind.io>

The Agit result should be returned from the `ProcReceive` hook, not the

`PostReceive` hook. Then, for all non-agit pull requests, it will not

check the pull requests on every push to `refs/pull/%d/head`.

Fix #29074 (allow to disable all builtin apps) and don't make the doctor

command remove the builtin apps.

By the way, rename refobject and joincond to camel case.

1. The previous color contrast calculation function was incorrect at

least for the `#84b6eb` where it output low-contrast white instead of

black. I've rewritten these functions now to accept hex colors and to

match GitHub's calculation and to output pure white/black for maximum

contrast. Before and after:

<img width="94" alt="Screenshot 2024-04-02 at 01 53 46"

src="https://github.com/go-gitea/gitea/assets/115237/00b39e15-a377-4458-95cf-ceec74b78228"><img

width="90" alt="Screenshot 2024-04-02 at 01 51 30"

src="https://github.com/go-gitea/gitea/assets/115237/1677067a-8d8f-47eb-82c0-76330deeb775">

2. Fix project-related issues:

- Expose the new `ContrastColor` function as template helper and use it

for project cards, replacing the previous JS solution which eliminates a

flash of wrong color on page load.

- Fix a bug where if editing a project title, the counter would get

lost.

- Move `rgbToHex` function to color utils.
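
One way to pick pure black or white for a hex background is sketched below. It uses the WCAG relative-luminance and contrast-ratio formulas, which is an assumption on my part and not necessarily the exact calculation used by GitHub or by the new `ContrastColor` function; the lowercase `contrastColor` here is a hypothetical stand-in.

```go
package main

import (
	"fmt"
	"math"
	"strconv"
	"strings"
)

// linear converts one 8-bit sRGB channel to linear light (WCAG definition).
func linear(c uint8) float64 {
	v := float64(c) / 255
	if v <= 0.04045 {
		return v / 12.92
	}
	return math.Pow((v+0.055)/1.055, 2.4)
}

// contrastColor returns "#000000" or "#ffffff", whichever has the higher
// WCAG contrast ratio against the given "#rrggbb" background.
func contrastColor(hex string) (string, error) {
	h := strings.TrimPrefix(hex, "#")
	if len(h) != 6 {
		return "", fmt.Errorf("expected 6 hex digits, got %q", hex)
	}
	n, err := strconv.ParseUint(h, 16, 32)
	if err != nil {
		return "", err
	}
	r, g, b := uint8(n>>16), uint8(n>>8), uint8(n)
	lum := 0.2126*linear(r) + 0.7152*linear(g) + 0.0722*linear(b)
	// Black wins when (lum+0.05)/0.05 > 1.05/(lum+0.05).
	if (lum+0.05)*(lum+0.05) > 1.05*0.05 {
		return "#000000", nil
	}
	return "#ffffff", nil
}

func main() {
	c, err := contrastColor("#84b6eb")
	fmt.Println(c, err)
}
```

Under these formulas the `#84b6eb` background mentioned above gets black text.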

@HesterG fyi

---------

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Giteabot <teabot@gitea.io>

It doesn't change logic, it only does:

1. Rename the variable and function names

2. Use more consistent format when mentioning config section&key

3. Improve some messages

This allows you to hide the "Powered by" text in footer via

`SHOW_FOOTER_POWERED_BY` flag in configuration.

---------

Co-authored-by: silverwind <me@silverwind.io>

Major changes:

* Move some functions like "addReader" / "isSubDir" /

"addRecursiveExclude" to a separate package, and add tests

* Clarify the filename&dump type logic and add tests

* Clarify the logger behavior and remove FIXME comments

Co-authored-by: Giteabot <teabot@gitea.io>

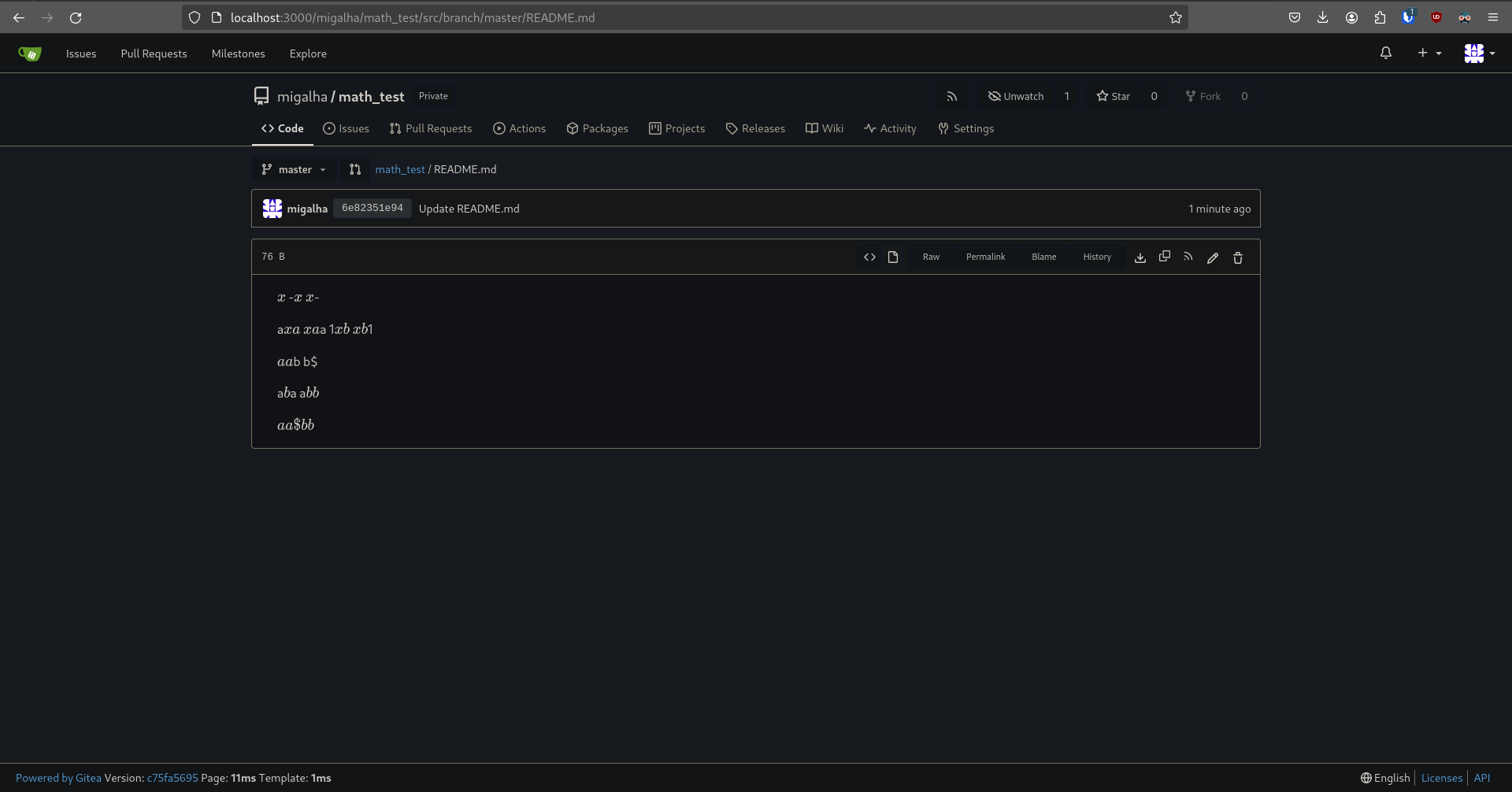

- Inline math blocks couldn't be preceded or succeeded by

alphanumerical characters due to changes introduced in PR #21171.

Removed the condition that caused this (precedingCharacter condition)

and added a new exit condition of the for-loop that checks if a specific

'$' was escaped using '\' so that the math expression can be rendered as

intended.

- Additionally this PR fixes another bug where math blocks of the type

'$xyz$abc$' where the dollar sign was not escaped by the user, generated

an error (shown in the screenshots below)

- Altered the tests to accommodate the changes

Former behaviour (from try.gitea.io):

Fixed behaviour (from my local build):

(Edit) Source code for the README.md file:

```

$x$ -$x$ $x$-

a$xa$ $xa$a 1$xb$ $xb$1

$a a$b b$

a$b $a a$b b$

$a a\$b b$

```
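
The escape check described above can be sketched as a small scanner. This is a simplified illustration, not the actual Gitea inline-math parser; `isEscaped` and `closingDollar` are hypothetical names.

```go
package main

import "fmt"

// isEscaped reports whether the byte at index i is preceded by an odd
// number of backslashes, i.e. whether it is escaped.
func isEscaped(s string, i int) bool {
	n := 0
	for j := i - 1; j >= 0 && s[j] == '\\'; j-- {
		n++
	}
	return n%2 == 1
}

// closingDollar returns the index of the first unescaped '$' after the
// opening '$' at index open, or -1 if there is none.
func closingDollar(s string, open int) int {
	for i := open + 1; i < len(s); i++ {
		if s[i] == '$' && !isEscaped(s, i) {
			return i
		}
	}
	return -1
}

func main() {
	// The escaped dollar in the middle is skipped.
	fmt.Println(closingDollar(`$a a\$b b$`, 0))
}
```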

---------

Signed-off-by: João Tiago <joao.leal.tintas@tecnico.ulisboa.pt>

Co-authored-by: Giteabot <teabot@gitea.io>

To make it more flexible and support SSH signature.

The existing tests are not changed, there are also tests covering

`parseTagRef` which also calls `parsePayloadSignature` now. Add some new

tests to `Test_parseTagData`

When reviewing PRs, some color names might be mentioned. The

`transformCodeSpan` (which calls `css.ColorHandler`) considered them

valid colors, but they shouldn't be rendered as color codespans.

## Changes

- Adds setting `EXTERNAL_USER_DISABLE_FEATURES` to disable any supported

user features when login type is not plain

- In general, this is necessary for SSO implementations to avoid

inconsistencies between the external account management and the linked

account

- Adds helper functions to encourage correct use

Previously, the default was a week.

As most instances don't set the setting, this leads to a bad user

experience by default.

## ⚠️ Breaking

If your instance requires a high level of security,

you may want to set `[security].LOGIN_REMEMBER_DAYS` so that logins are

not valid as long.

---------

Co-authored-by: Jason Song <i@wolfogre.com>

This PR uses `db.ListOptions` instead of `Paginator` to make the code

simpler.

It also fixes the performance problem when viewing /pulls or

/issues. Previously, the counting in fact also performed the search.

---------

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: silverwind <me@silverwind.io>